Manual PDF Export

1. Management

Congratulations on your decision to implement advanced analytics in your plant!

In order to get the most out of the experience of using analytics, we suggest that you take a little time to think about the managerial process of integrating this solution into your existing organization. Using a new capability is much more complex than installing a new software package.

In your planning, please consider such topics as

- The software installation will need IT experts to install the programs and also to setup the network permissions.

- Engineering experts will select the data to be used for modeling.

- Process experts will study the model, fine tune it, and decide its fitness for use.

- The end users of the software will need to be trained on how to use it.

- Management will need to decide on the usage policy.

- Action plans need to be established by change management in order to get practical use from the results of analytics.

- Feedback on the benefits earned and lessons learned will need to be communicated within your organization.

- Communication with algorithmica takes place when it appears that the model or the software needs to be adjusted or customized.

We therefore encourage you to think about this as a project management task in which there are stakeholders, a leadership team, a project manager and team members. We will not go into detail on the topic of project management in this manual but assume that your organization has that competency already.

The introduction of an analytics program will cause changes to the work environment for a number of people. Getting them used to the new way of working and integrating the technology into the work flow will be an adjustment. Some change management will need to occur for this to take hold in the organization.

1.1. Project Plan

The project of introducing the software to your organization has six phases:

- The software needs to be installed and configured. We discuss this in detail in the chapter on installation.

- The tags that the model will look at must be selected and some information about each tag defined. This information will be introducted in the chapter on installation and detailed in the how-to chapter.

- Historical data must be prepared and uploaded for the tags selected. The model will be learned on the basis of this historical data.

- The model will be learned and assessed for its quality and suitability. Some fine-tuning of model parameters may be necessary at this point in order to get the model to accurately represent the situation and solve the problem.

- After the model is suitable, the software can be put into productive use by connecting it to the source of live data and cyclically computing the answer to the posed question.

- It is at this point that the project of setting up the application is over but the change management task of integrating the solution into the workflow of the organization begins.

1.2. Organization

We highly recommend forming a project management team for the purpose of introducing advanced analytics software into your organization. As introduced above, this project will require a variety of technical and managerial roles to participate and the project requires a number of decisions to be made that will require these roles to agree.

The project should have a project manager who takes responsibility for getting all the work done and who has the authority to instruct the project team members to provide their respective inputs. The project can be managed by KPIs that the software provides such as the number of good optimization suggestions, the number of such suggestions actually implemented, the accuracy of the models and so on.

The phases of the project were introduced above. Phases 1 and 5 are dominated by IT and will need cooperation from that department. Phase 3 is mainly a task for the administrator of the data historian and may involve cooperation from the IT department.

Phases 2 and 4 will require deep process knowledge and understanding. We recommend conducting phase 2 as a workshop attended by process managers, engineers and operators. These persons can then discuss in detail the information required (described in the chapter on know-how). Phase 4 is the assessment of model goodness of fit and fine-tuning in case the goodness needs to be improved. We recommend that this step be done by one process expert. Generally every process expert has a personal philosophy of how everything works and this philosophy will guide this person in tuning the model(s). This person must be given sufficient time in order to carry out this task with sufficient attention to detail. This phase is the critical step in getting the software configured correctly. Mistakes and ommissions made earlier can be corrected here. Mistakes made in this phase will lead to problems down the road.

Phase 6 is the phase of change management in which the model(s) will be introduced into the everyday activities of the plant. This may require changing policies and procedures and getting appropriate management buy-in. It will definitely require convincing the operators to adopt and trust the model(s). To be useful, the software output should trigger some form of human activity. In the case of APO, suggestions must be implemented. In the case of IHM, alarms must be checked out and, possibly, maintenance measures conducted. Management as well as operators must discuss and agree on the procedures to be followed. This phase is the critical step in getting the software to be useful. We recommend that the process of user adoption be measured using objective numerical KPIs such as the number of suggestions implemented (APO) or alarms dealt with (IHM).

The entire process can be reasonably completed in three months. We recommend that the project team aim at a similar time-frame as experience has shown that project satisfaction and user adoption decreases with significantly longer project durations.

2. Installation

This chapter describes how to install the software to the point that it runs correctly. After installation, the software must be configured for its use case as described in the chapters on the individual solutions.

2.1. Prerequisites

The software application runs on a 64-bit Microsoft Windows operating system. We recommend Windows Server 2008R2 or later. The server must have sufficient disc space for the historical and future data. We recommend at least 1 TB of free disc space. The processor and memory can be standard such as an i7 processor and 16GB of RAM. If computation speed is an issue, this can be alleviated by a high-end graphical processing unit (GPU) by Nvidia as we can make use of the CUDA/CULA support. This is far more efficient than buying a high-end CPU.

We recommend that the server have a RAID system to prevent loss of data due to hard disc malfunction. We also recommend that a data backup be run on the database files on a regular basis to prevent system loss.

The firewalls between the OPC server and the application as well as the firewalls between the application and the office network of the users must be opened.

algorithmica must obtain a user account on the server with administrative privileges.

On the day of initial software installation, the server must have full internet access in order to properly install the Ruby-on-Rails environment. After this installation, the internet access can be removed forever.

In order to provide continued update/upgrade services, consulting services or customizations, algorithmica must be able to access the server remotely.

Here is a checklist

- Hardware

- At least 1TB free disc space

- Standard processor, e.g. i7 920 or better

- At least 16GB RAM

- Optionally an NVIDIA GPU

- Optionally a RAID system

- Optionally a regular data backup

- Software

- 64-bit Microsoft Windows operating system

- algorithmica user account with administrative privileges

- Opened firewalls between OPC server and the application

- Opened firewalls between the application and office network

- Internet access on day of software installation

- Remote access for algorithmica

2.2. Installation

The software installation is performed fully automatically by the installer program delivered to you by algorithmica technologies. There is nothing that you need to do other than provide the computer with an internet connection and execute the installer.

The installer will install the following programs

- PostgreSQL: The database management software that will hold all the data.

- pgAdmin: The administration console for PostgreSQL.

- Ruby: The language behind the user interface.

- Ruby on Rails: The framework in which the user interface is written.

- Bundler: The package manager for Ruby on Rails.

- Git: A versioning utility needed to obtain current versions of plug-ins.

- Node.js: A javascript library needed for the user interface.

- Sqlite: A database management software that will not be used but is an integral part of Rails.

- SQL Server Support: Necessary Rails routines to allow connectivity to the database.

- Rails DevKit: Necessary Rails routines to be able to compile some plug ins that are delivered in code form.

- Gems: A large number of extensions to Ruby on Rails known as gems.

- AI: The machine learning software by algorithmica technologies that performs the analysis behind the applications.

- Softing OPC: The OPC client used to read live data uses the OPC toolkit produced by Softing AG for which algorithmica has a developer license.

All of these programs are licenced in a version of either the MIT, BSD or GNU LPGL licences. As such they may be used for commercial purposes without payment. All of these programs are used only for the purpose of storing data and presenting a user interface. All mathematical analysis is performed using software written by algorithmica technologies without the use of any third-party components.

The installer creates a new user account on the computer with the name "postgres" with password "admin". This account is important and will be used by the database server utility. Within the database, the installer will create a new user with the name "viewer" and the password "viewer".

2.3. Network Setup

The software is now installed locally. If you are going to test the software, then you do not need to continue with a network setup. If you plan to look at the user interface from other computers, then a network setup is necessary.

The user interface broadcasts its webpage on port 3000. In order for you to be able to look at the interface from a different computer, the other computer must be able to have access to the IP-address of the application computer and the application computer must be able to send its content back. This may require firewall settings to be changed. As this depends on the setup of your particular network, please check with your IT department. On the application computer, port 3000 must be opened to the HTML protocol for it to be able to send its content out.

2.4. Testing

Please verify the successfull installation by starting a browser and typing "http://localhost:3000". You should see a login page. This is the user interface. You may login as "admin" with the password "admin". If this login works, the software is correctly installed locally.

To verify that the network setup also works, please reload the page by typing in the IP address of the computer into the same browser, i.e. "http://[IP-address]:3000". If you end up at the same login page, then the network setup also works and you should be able to see the interface from any computer in your office network if you enter the IP-address into any browser. If this does not work, but the previous step worked, then a network setting must be changed. Please contact your IT department for help on this issue.

The installer will also setup a windows service for the interface. If the computer is ever restarted, then the interface will automatically start up together with the boot process of the computer. Thus, the interface will always be accessible when the computer is up and running.

This concludes the software installation.

2.5. Troubleshooting

Problems at this stage could have several forms:

- The installer terminated with errors. Please check that you have administrative priviledges on the user account of your computer from which you started the installer. Please check that your internet connection is working and not restricted in any way. Please check that your operating system is a 64-bit Microsoft Windows system.

- The installer finished without errors but the page "http://localhost:3000" does not display anything. Depending on your system, there may be a time delay of a few minutes between the end of the installation and the start of the webserver. Please wait up to five minutes after the installer is finished and try again. If the page still does not load, the webserver is not running. To start it manually, please start a commandline prompt, navigate to the install directory and execute the command "rails s -b [IP-address]" to start the webserver. Please give it two minutes to start up and check the webpage again. The webpage should now load normally or the commandline prompt will contain a feedback message telling you the cause of the problem.

- The page at "http://localhost:3000" displays fine but the page at "http://[IP-address]:3000" does not. The software and interface server work fine. The problem is in the routing to the IP address. Please consult with an IT network administrator regarding the opening of port 3000 for the HTML protocol.

- The page at "http://[IP-address]:3000" displays fine on the local computer but not on other computers. There is a problem in the network connectivity and most probably with the firewalls. Please consult an IT network administrator.

- Some other problem exists or one of the above problems persists. Please contact algorithmica technologies and ask for help. Please provide exact and detailed information about your system, what you have done so far and how the problem manifests. Thank you for your patience!

2.6. Data Requirements

Any analysis of data relies on the quality of the data provided. First and foremost, we assume that all important aspects of the process or equipment are included in the dataset. If important data is missing, then modeling may not work as well as it would if this data were included. On the other hand, it is not good to overload a model with a large number of unimportant measurements. The most important action for modeling is a sensible selection of tags to be included in the model. For an industrial plant with a complex control system, we can usually say that less than 10% of all available measurements are actually important for modeling the process.

However, in case of doubt, we recommend to include the measurement rather than exclude it. The reason for this is the same as in human learning. If you have irrelevant information, this is time-consuming and perhaps annoying but it will not prevent you from reaching understanding. However, if you lack important information, this may prevent you from achieving actual understanding. So it is best to err on the side of inclusion.

Most data historians have the policy of recording a new value for a tag only if it differs from the last recorded value by at least a certain amount. This amount is usually called the compression factor. This means that some measurements are recorded frequently and others very seldom. For mere recording this is a huge space saving mechanism.

For analysis and machine learning, we have to align the data however. For each time stamp, we must know the value of every tag at that time stamp. Most machine learning methods also require the time difference between successive time stamps to be the same.

In order to get from the usual historian policy to this aligned data table, we use the general rule that the value of a tag stays the same until we get a new one. A time-series may thus turn into something looking like a staircase. In most cases this data alignment leads to a growth in total data volume as there will be a number of duplicate values. This cannot be avoided however.

For this reason, we must choose a sensible data cadence. That is to say, the time difference between successive time stamps in this table must not be too small for reasons of data volume but also not too large so as to make the process dynamics invisible. The cadence must be chosen with care based on knowledge of the inherent time scale of the process that one wishes to model.

A dataset must have a beginning and an end. For training an IHM model, we must select the training data time period with care as we want to show a period of healthy behavior for the equipment in question. If we believe that external conditions, e.g. the seasons, matter for an accurate depiction of the process, then we need some data for these different conditions. For accurately modeling health on equipment, we usually find that a training time period of three to six weeks is sufficient. These weeks need not be consecutive. If we believe that seasons are important, we choose one week each in spring, summer, fall and winter. For optimization modeling e recommend a full year in order to include seasonal variations.

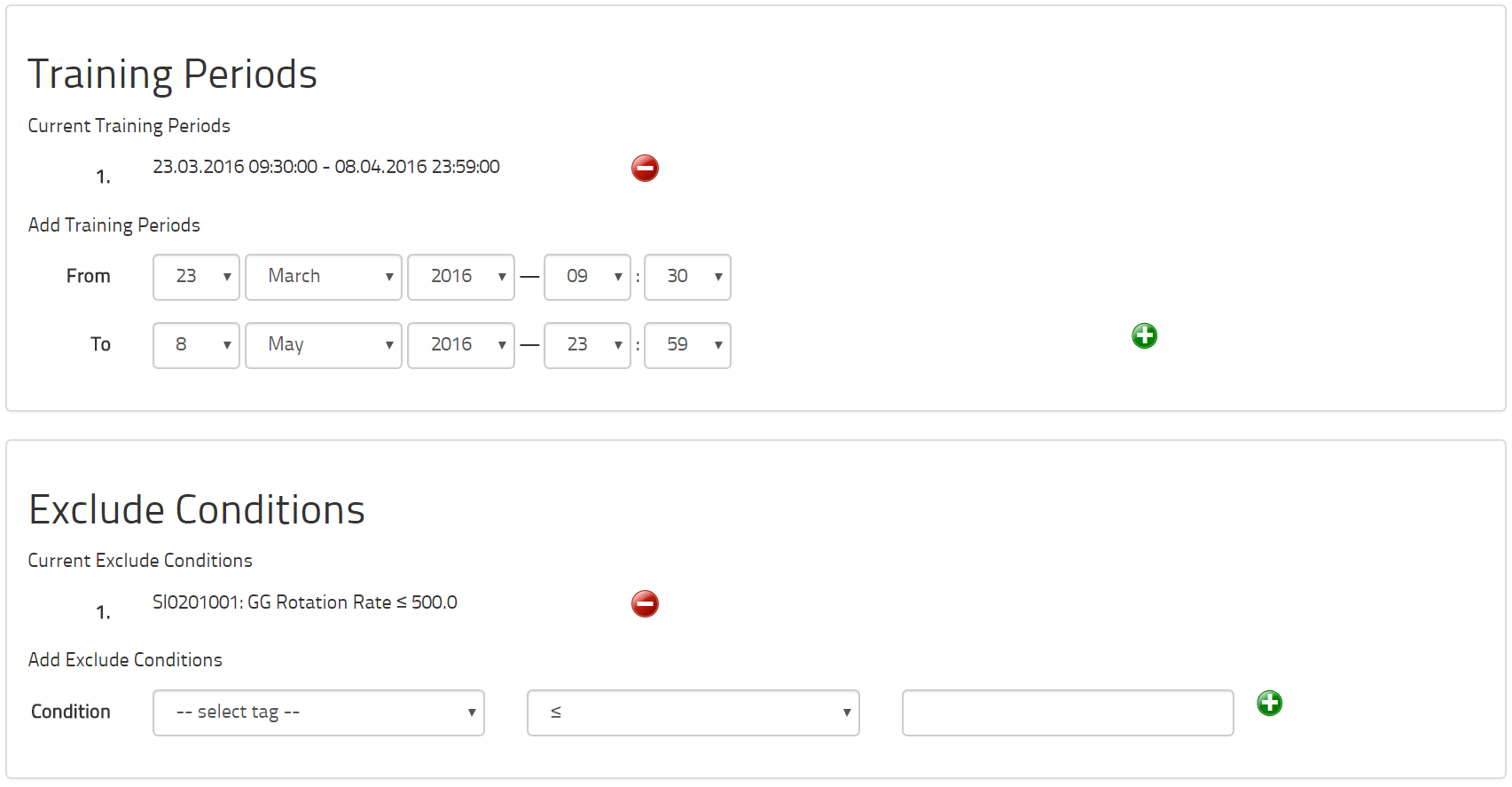

In the time periods used for training, we may have temporary conditions that should not be trained. If, for example, the equipment is turned off each night, we would not want to train the model during the hours that the equipment is not operating at all. For this reason, the software offers exclusion conditions based on the values of certain measurements. One might say for example that all data points where the rotation rate of the turbine is less than 500rpm must be excluded from modeling. The tags needed to make such judgments must be included in the dataset.

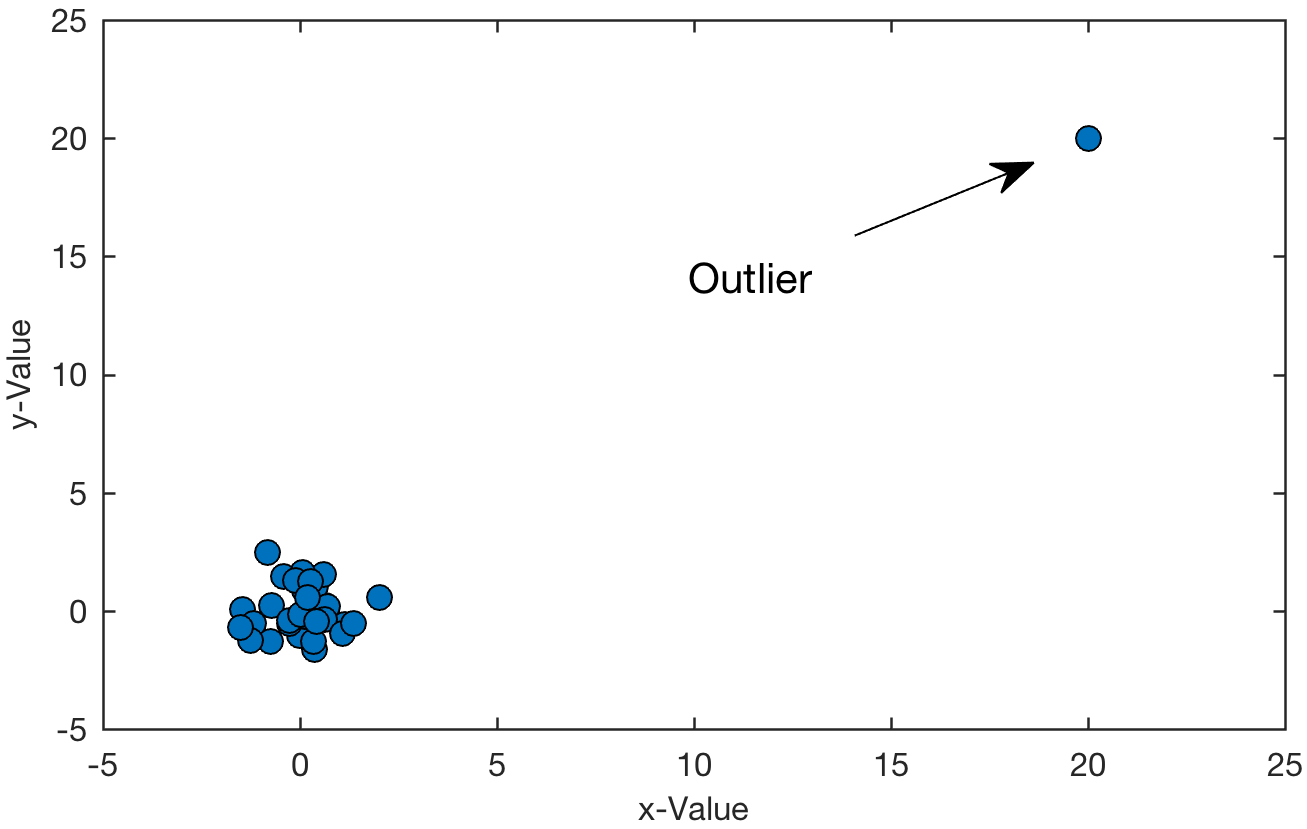

After these choices are made, the tags must be made known to the modeling system by providing some basic information about them. Apart from administrative information such as their names and units, we must know a few more facts. As measurement errors do occur in practice, we must know the range of measurements that are allowed so that a very low or very high measurement can be identified as an outlier and excluded. We need to know the measurement uncertainty so that we can compute the uncertainty in the result of the analysis; for more information about this, please see the section on mathematical background. For IHM, we need to know which of the measurements are to receive dynamic limits and are thus to be alarmed. In practice, most of the measurements in the model will not be alarmed but only used to provide context and information for those measurements that should receive alarms. For APO we must know which tags can be controlled directly by the operator and which cannot be controlled at all.

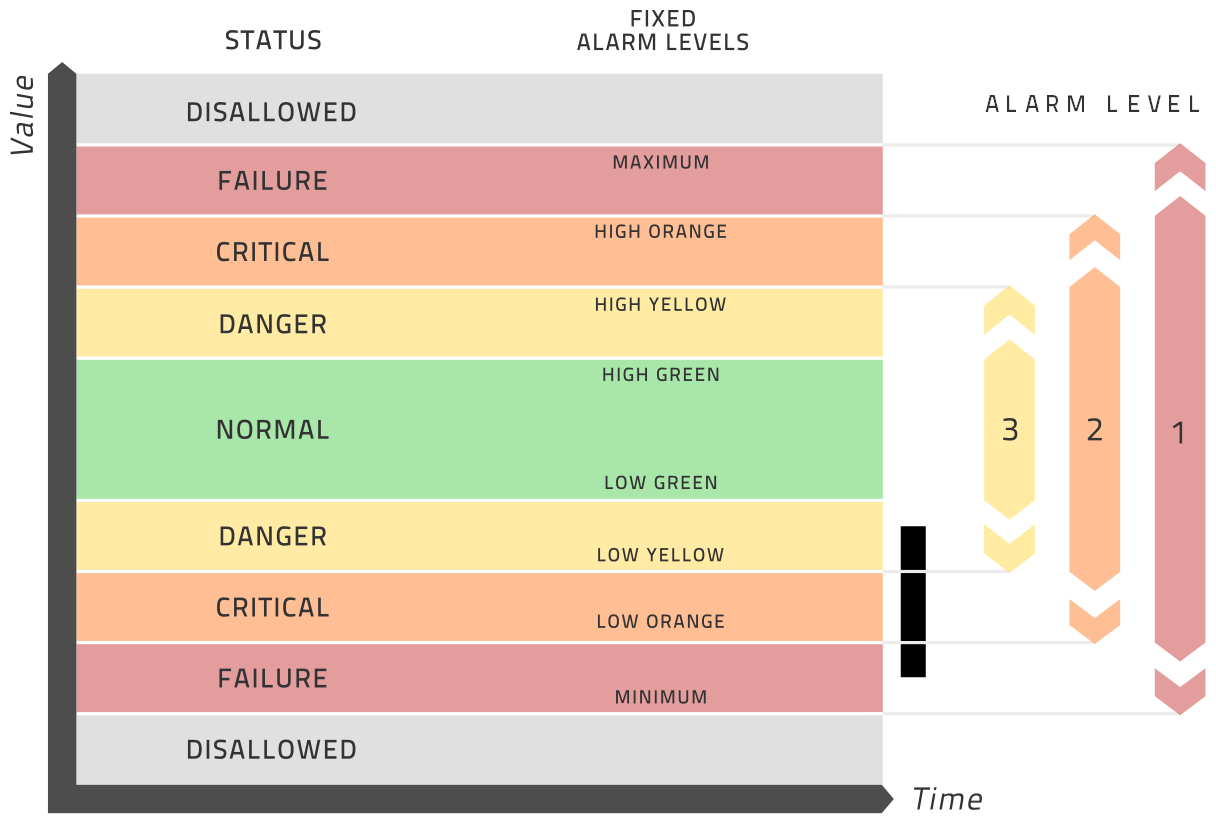

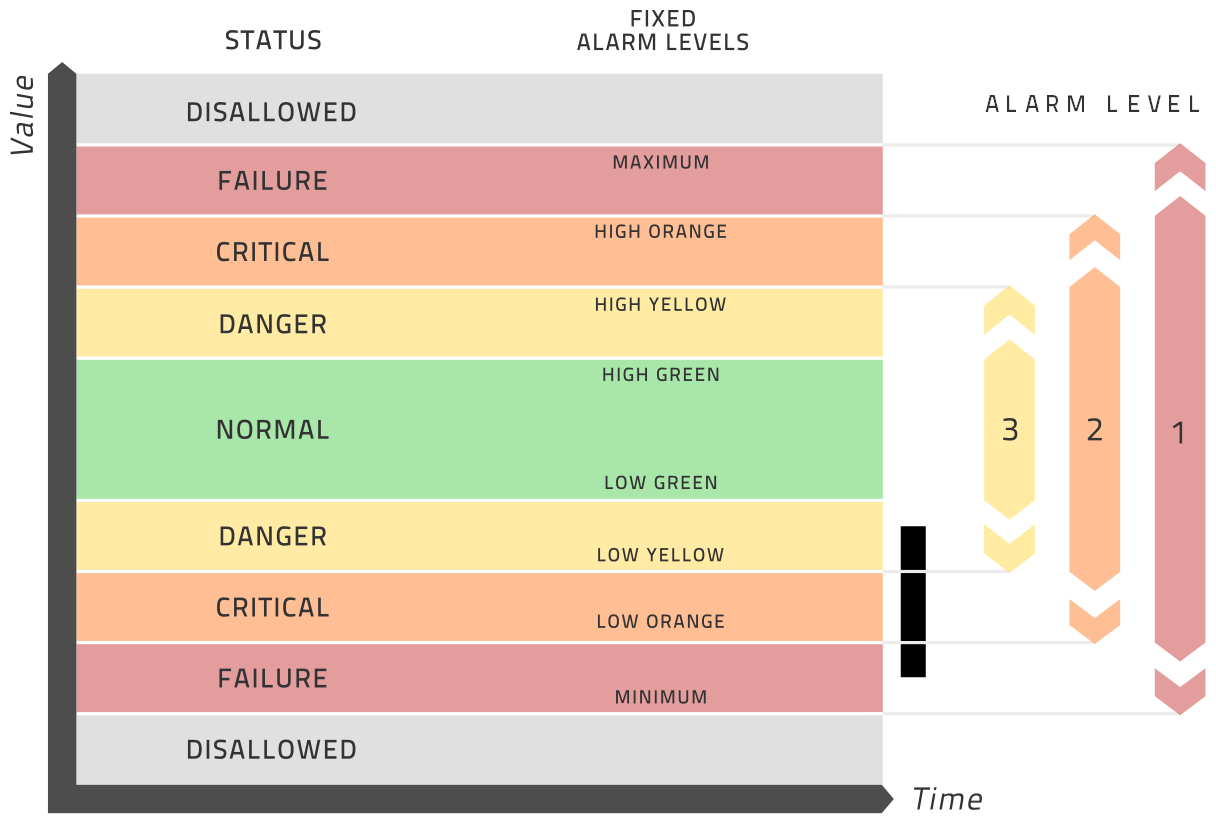

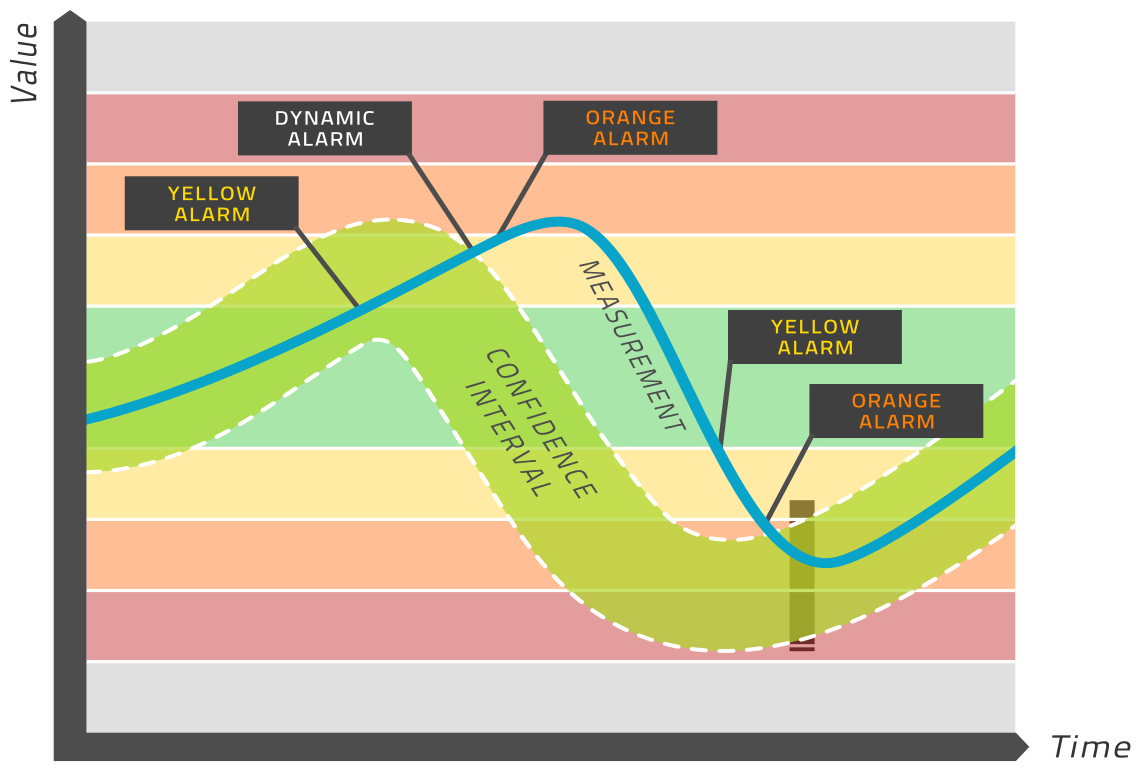

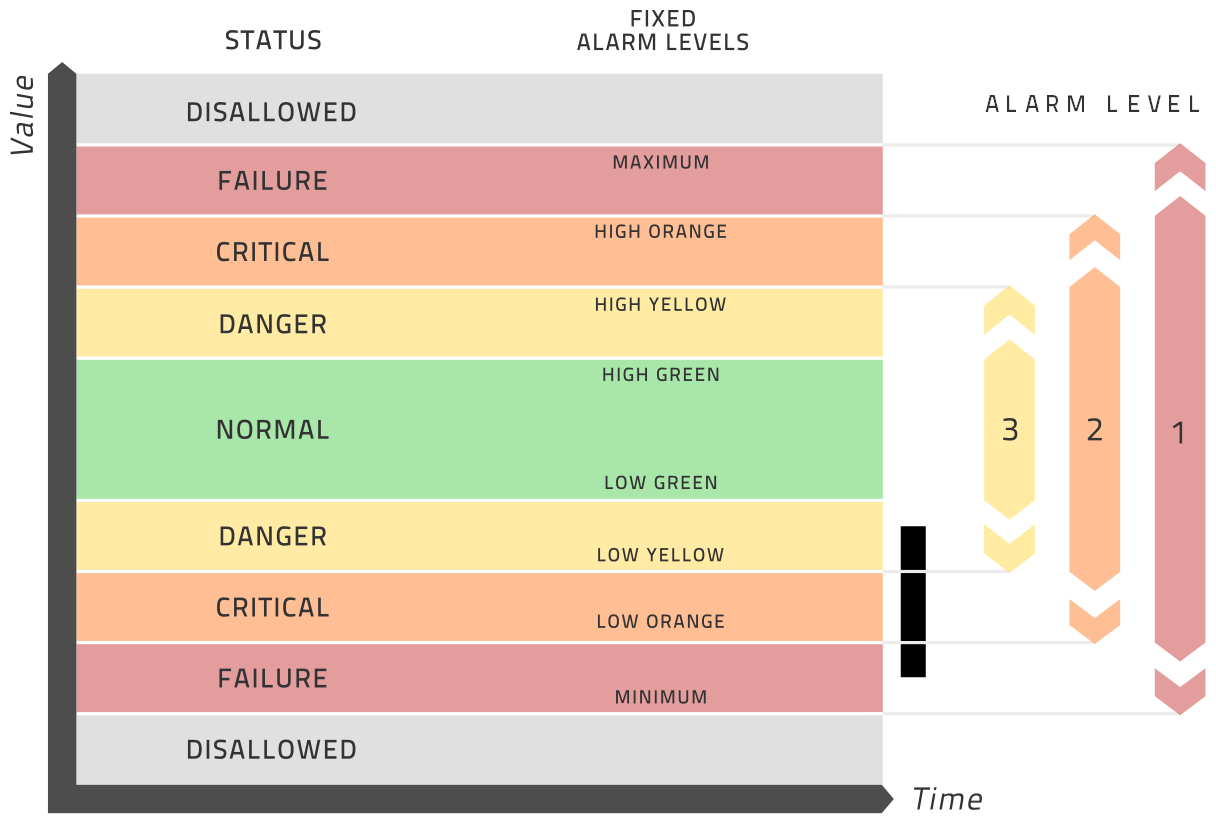

Optionally, you may specify up to three static alarm ranges for each measurement. This is provided so that IHM supports traditional condition monitoring in addition to its dynamic limit approach. This analysis and alarming is separate from the modeling analysis and thus totally optional. These ranges are best explained in the diagram below.

In summary, these are the initial choices to be made in relation to your dataset:

- Which tags are to be included?

- What data cadence is to be used?

- What time period will the dataset have?

- What are the time periods to be used for training?

- What exclusion conditions should be applied?

- For each tag …

- What is the allowed range of values?

- What is the tag uncertainty?

- Is this tag to be modeled and alarmed?

- Can this tag be directly controlled by the operator?

- Optionally, what are the static alarm ranges for yellow, orange and red alarms?

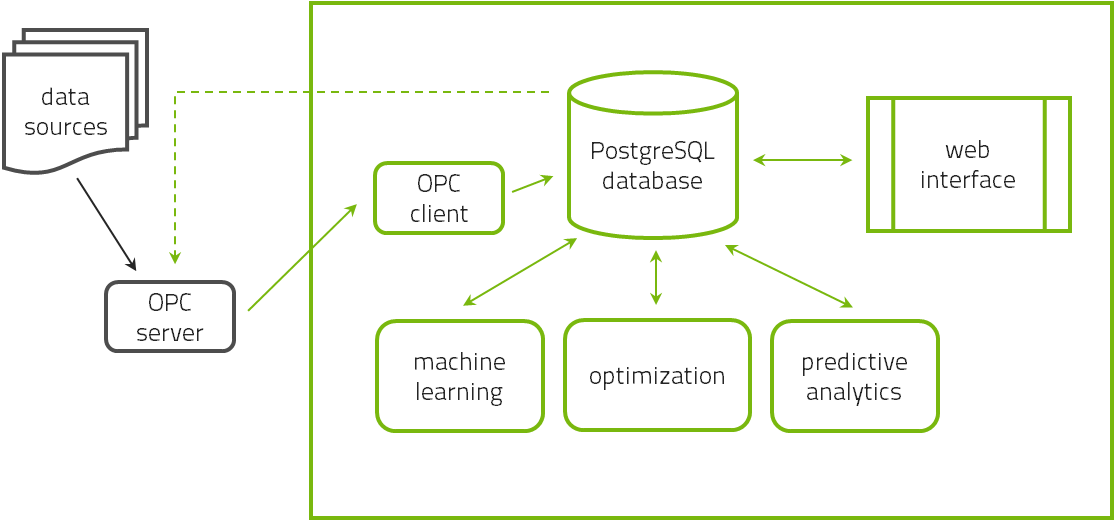

2.7. Software Architecture

The software has three major components. The central database that stores all data and model information is a PostgreSQL database. The data is analyzed by a command-line executable that reads data from the database and writes its results back to the database. The user interacts with this system via a browser-based graphical user interface written in Ruby-on-Rails.

All three components are typically installed on a single computer-server on the premises of the users’ institution. All users can access the interface via any web-browser on any device connected to the intranet of the institution provided that the appropriate firewalls allow this access. As the software is installed on the premises, this is not a cloud service and the data remains on site. There is thus no security threat either by loss of proprietary data or hacking attacks.

The three components may be installed on different servers if the user desires to optimize the database server for disc space and the computational server for computation speed but this is not necessary.

Initially, the historical data is expected to be provided in the form of a file. The reason is that a data export followed by a data import has been found to be far more efficient rather than a direct data connection via e.g. OPC-HDA.

The regular reading of real-time data is done via OPC-DA. For this purpose, the name of the OPC server must be specified (an example is opcda:///Softing.OPCToolboxDemo_ServerDA.1/{2E565242-B238-11D3-842D-0008C779D775}) and any intermediate firewalls must be appropriately opened. Also, the full item name of each tag in the OPC server must be specified to be able to read the data.

3. How-To

This chapter describes various practical tasks independently of each other:

- Define the plant. In order to create a model, a plant must be setup and defined.

- Data preparation. The most essential task in advanced analytics is to prepare and describe the data to be analyzed.

- OPC connectivity. In order to analyze data in real-time, it must be connected to a data source by OPC.

- Bring Online. For real-time analysis, the application must be brought online.

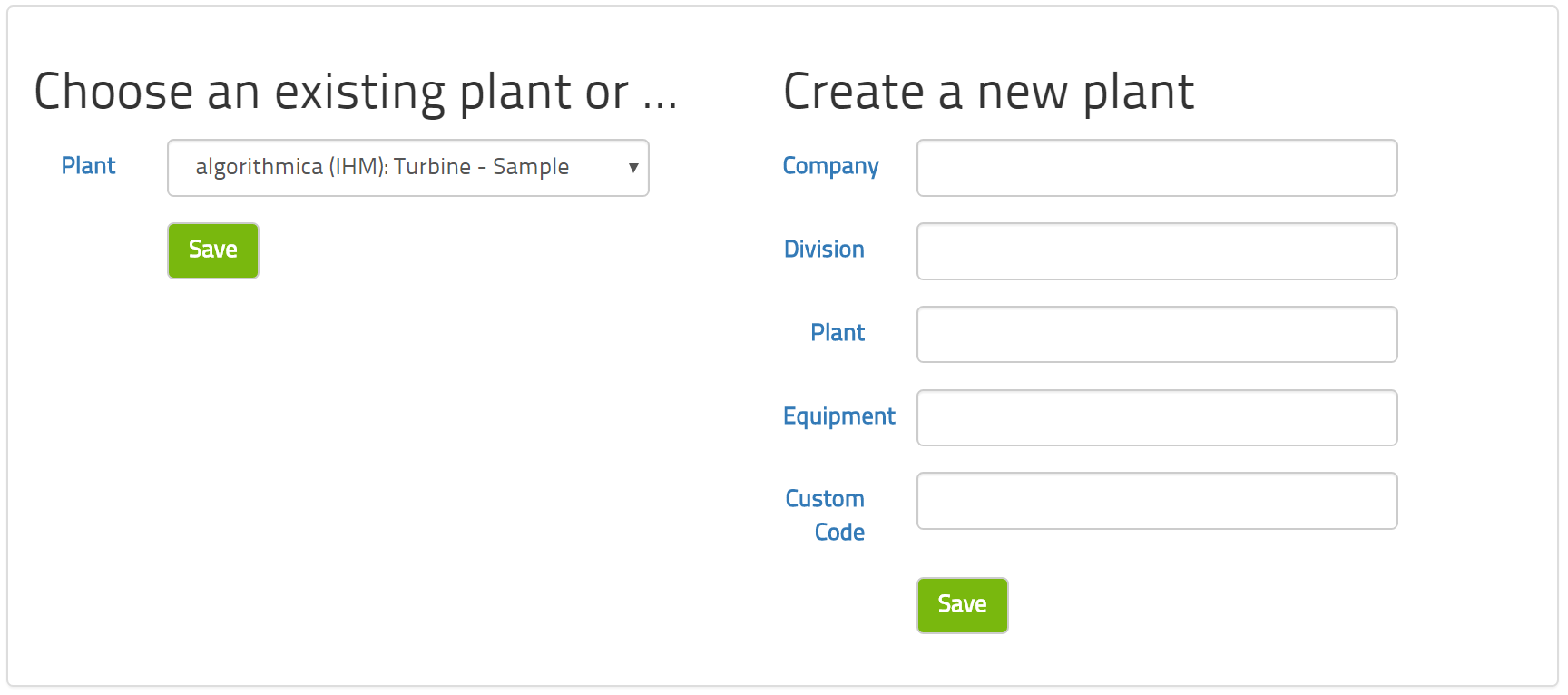

3.1. Define your Plant

The plant is the entity that defines your data set. In order to construct a model, the first thing to do is to create a plant object for it.

In order to refer to the plant, you have four text fields available: company, division, plant and equipment. These are just labels so that you can organize several models in one user interface and manage user permissions to see individual models. You can also use the comment field to leave a longer note explaining what this model is about. During a testing or tuning phase, you may wish to construct more than one model in order to be able to compare them. The comment field is useful to keep track of which model was built how.

The custom code is a five-digit number provided to you by algorithmica technologies when you purchased the software licence for this plant. It's purpose is to identify any custom analysis steps that were written for you and included in the analysis software. If you are testing the software, you will not have this code and you may leave this field blank.

The maximum lag time is relevant only for use with the intelligent health monitor (IHM). The selection of independent variables used by IHM may be done automatically and may optionally include a time-delay. This option specifies the maximum time delay in number of time steps for this possibility. See the description of IHM modeling for more details.

The three fields OPC name, OPC DA version and subscription frequency are relevant for the OPC connection to a data source. This is relevant only for using the software in real-time. Please see the how-to section on bringing the system online for more information.

The three time periods that define the start and end of the reference time period and the start of the operational period are concepts used by APO to assess the success of the suggestions. The reference period is the time frame for the data used to build the model and the operational period begins when the suggestions made by APO are actually implemented.

3.2. Prepare Your Data

Machine learning works by analyzing historical data to find some pattern and to store that pattern in a mathematical model. For this to work, the methods require both the historical data itself and some extra information about the data. This section will describe how to prepare all this so that the learning can take place.

The situation that we want to model will generally have many properties that are measured by sensors and that we can include in the model. The first step is to choose which of the available sources of data will be included in the analysis. As a second step, each of these data sources needs to be characterized with some essential information such as its allowed range of values. The third and last step is to prepare a table of historical values for each of the data sources.

With all of this data in place, the analysis and learning can begin.

3.2.1. Select Tags

To start your modeling experience, please choose the tags to be included in the model. Your plant will have many sensors installed that measure a myriad of properties. Many of these will not be relevant for modeling. To guide your selection, please consider the following guidelines:

- Any tags that the operators regularly modify should go into the model.

- Any tags that represent important boundary conditions for the process should go into the model.

- Any tags that have relevance to security, quality, efficiency and monetary value should go into the model.

- Any tags that are used only during start up, shut down or emergency procedures are probably not relevant for modeling normal operations.

- Any tags that are used for condition monitoring or similar purposes are not relevant for optimization modeling.

As a rule of thumb, up to 90% of process instrumentation is irrelevant for modeling. In most cases, a process model only requires a few hundred tags even though many thousands may be available.

When in doubt, it is better to include the tag in the model. Too much data is always better than too little data. However, the model will be unwieldy and more time consuming to set up with an increasing number of tags and so it is in your best interest to keep the number of tags relatively low.

3.2.2. Prepare Meta-Data

When you have identified the tags you want to include, next you need to define some information about each tag. Most of these are straightforward.

There are two ways in which you can prepare this data:

- You can enter or edit the tag information directly through the interface by clicking on

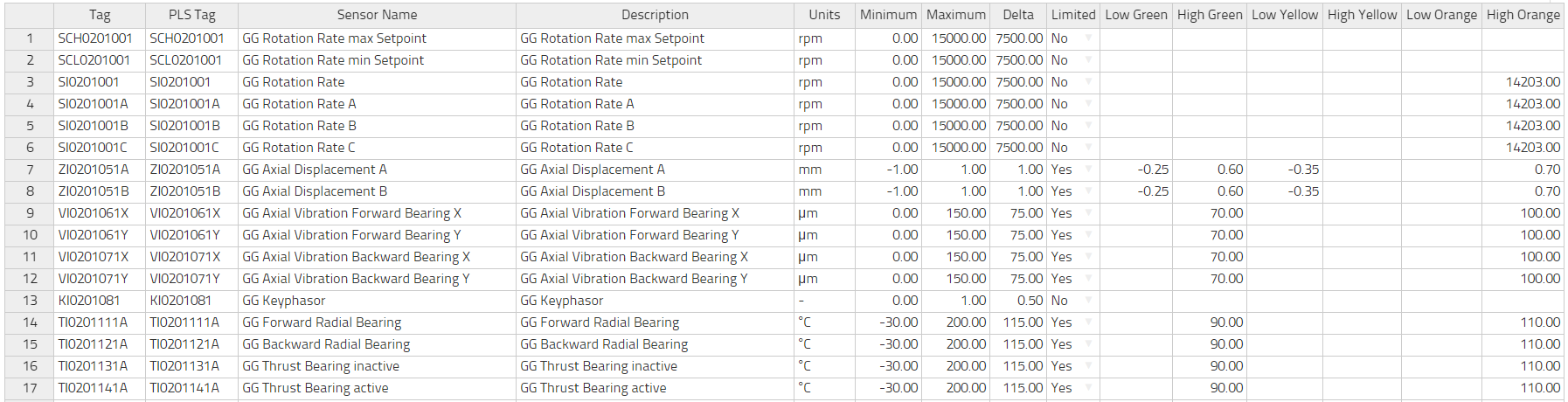

plant -> edit tags. The form allows you to add tags or to edit the information for each tag. Please do not forget to save your work! This is the most convinient way to define this information. Please note that this table supports copy and paste but please be careful when using copy and paste for large amounts of information as there may be misalignments. - You can prepare a file that holds the information and is uploaded into the database in one go. This file will be a text file containing 16 TAB-delimited columns. If normal ASCII characters are sufficient, this file may be saved as ASCII but if non-ASCII (for instance the German umlauts ä, ö, ü or certain unit symbols such as ° or µ) characters are needed, then it should be saved in UTF8 encoding. This file may have a header row with the names of the columns in it.

Here follows a brief explanation of each column and whether it is required or not

| Column Name | Requirement | Description |

|---|---|---|

| tag | required | The unique identifier for a time-series. This is often an alphanumeric string of characters used in the DCS or data historian to label a tag. |

| pls_tag | required | Often the same as the column "tag," this is the unique identifier used by the OPC server. algorithmica uses this column in order to query the current value of the tag from the OPC data source. It must therefore include any and all OPC item information. |

| sensor_name | recommended | A short description of what this tag is. |

| description | optional | A longer description of what this tag is. |

| units | required | The physical units of the tag, e.g. "°C" |

| minimum | required | The smallest value allowed. Any values lower than this will be ignored as not physically possible. For a detailed discussion on the concept of minimum and maximum see their section in the chapter on terminology. |

| maximum | required | The largest value allowed. Any values larger than this will be ignored as not physically possible. |

| controlability | required | Determines whether the tag can be directly controlled by the operator, not controlled at all, or indirectly controlled. |

| low green | optional | Within the range of allowed valued [minimum, maximum] we may define three sets of fixed alarm levels from the inside out: green, yellow and orange. Please see the image below for better understanding. |

| high green | optional | |

| low yellow | optional | |

| high yellow | optional | |

| low orange | optional | |

| high orange | optional | |

| delta | required | The measurement uncertainty of this tag in the same units as the value itself. If you are setting this up for the first time, please read the more detailed explanation of this concept in the chapter on terminology. |

| limit | required | This column is either "TRUE" or "FALSE" depending on whether this tag is to receive a dynamic limit from IHM or not. |

Columns that are not required, may be left blank. In particular, optimization by APO does not consider the colored limits. A sample file is provided with the installation files.

3.2.3. Prepare Process Data

The data file is an ASCII text file with its first column being a timestamp and then more columns of floating point numbers, one for each of the tags in the model. This file is semicolon-delimited. This file may have a header row with the names of the columns in it. It is essential that the order of the column in the data file is the same as the order of the rows in the tag file.

The timestamp may be in one of two formats:

- The standard European time format: dd.mm.yyyy hh:mm:ss.xxx

- The ISO 8601 format: yyyy-mm-dd hh:MM:ss.xxx

In both cases, the use of milliseconds '.xxx' is optional.

Here is a sample file that is semi-colon delimited.

| Time | CHA01CE011 | LAE11CP010 | LAE11CT010 | LBA10CF001 |

|---|---|---|---|---|

| 01.01.2015 00:00:00 | 1.1 | 2.1 | 3.1 | 4.1 |

| 01.01.2015 01:00:00 | 1.2 | 2.2 | 3.2 | 4.2 |

| 01.01.2015 02:00:00 | 1.3 | 2.3 | 3.3 | 4.3 |

| 01.01.2015 03:00:00 | 1.4 | 2.4 | 3.4 | 4.4 |

A sample file is provided with the installation files.

3.3. Setup OPC Connection

In order to update your model in real-time, the model must have access to the process data via an OPC interface. We assume that your control system, archive system or other data source provides an OPC server. algorithmica provides an OPC client in order to read this data.

The basic data of a plant (click on plant -> edit) includes three fields relevant to the OPC connection:

- OPC Name. This is the full name of the OPC server in your network. A sample name is opcda:///Softing.OPCToolboxDemo_ServerDA.1/{2E565242-B238-11D3-842D-0008C779D775}.

- OPC DA version. OPC includes several protocol types. algorithmica applications make use only of the DA type, which means data access. It is used to query the most recent value of a tag only. This protocol is available in version 2 or 3. Please specify which version your OPC server supports.

- Subscription frequency (sec). This is the number of seconds that we should wait before quering a new value. Please choose this number with great care. The application will query every tag at this frequency, store the values in the database and perform the model computations. Choosing a low number will not only strain the computational resources of your network but it will probably not achieve practical benefits as the human reaction time may not be sufficient to cope. Choosing a very large number may cause a significant time delay between the physical effect modeled and any possible reaction. In case of doubt, we recommend choosing a time period somewhere in the range of 300 to 900 seconds, i.e. 5 to 15 minutes. Ideally this value is the same as the data cadence chosen in the historical dataset.

If you have not done so already, please input these values into the interface and save them. We assume that you have already provided each tag with the field "PLS tag", which is the full OPC item name of that tag. The combination of the information of the OPC server and the OPC item of each tag allows the application to read the values.

Please note that the application computer must be able to access the OPC server over the network. This may require changes to be made to the firewalls in your network.

In order to check that the OPC server can be reached and that the items are read correctly, please go to plant -> OPC diagnostic. This form should display some basic information about the OPC server and also the current value of each tag. If there is a check mark next to the server status and each tag, then the connection is good and the item names have all been found. If there is an X next to server status, then either the server name is wrong or it cannot be reached on the network, please check with your network administrator. If there is a check mark next to server status but some tags have an X next to them, then their item names cannot be found on the OPC server. Please check these item names and correct them by going to plant -> edit tags

Having successfully provided all this information and checked it to be correct and working does not turn real-time computing on. You may turn real-time computing on and off independently of providing all the information required for its use.

3.4. Bring Application Online/Offline

In order to determine whether your application is currently running in real-time or not, please go to plants -> OPC on/off. The status displayed there will inform you whether the process is currently operating, when the last data point was read and what the update frequency of reading is set up to be.

Due to network delays and temporary communication breakdowns across computer networks, we have adopted the following definition of whether the OPC data connection is online: If the last data point read is at most two times the update frequency in the past, then the connection is considered online. If the last data point read is older than that, then it is considered offline.

Depending on the status, you will find a button to turn real-time processing on or off. After clicking on this button, please allow some time to pass before the connection is either established or severed. This may take several minutes depending on your network. By updating this page, you will eventually see the change in connection status.

This page also offers you the option to consult the OPC log file. This keeps a record of major activities on your OPC connection. There is usually no reason to consult this file unless there is a problem with the connection.

4. Advanced Process Optimizer (APO)

Operating a plant is a complex task for the operators who have to make many detailed decisions every few minutes on how to modify the set-points of the plant in order to respond to a number of external factors. The plant must always deliver what its customers demand and it must respond to changes in the weather and the quality of its raw materials among other things.

Plants are operated in shifts. A frequent observation during a shift change is that the new shift believes that it knows better than the previous shift. Therefore, a number of set-points are modified. The plant, due to its size, may require a substantial period of time to reach equilibrium after this happens. Some eight hours later, at the next shift change, the scene is repeated. As a result, the plant is rarely at the optimal point.

Not only is this true because of the divergent beliefs of the various shifts. It is also due to information overload as a plant may have ten thousand sensors that cannot possibly be looked at by human operators. So each operator must, by training and experience, decide which few sensors to use for their decision making. Those few sensors provide a lot of information but not all of it.

Automation and control systems are usually local and designed from the bottom up. They do well on encapsulated systems such as a turbine, furnace, boiler and such. An overarching methodology is very difficult for these systems and seldom considered. It is in the interplay of all the components of a plant that the potential for optimization lies untapped.

So let us consider the entire plant as a single complex system. It is a physical device and thus obeys the laws of nature. Therefore, the plant can be described by a set of differential equations. These are clearly very complex but they exist. This set of equations that fully describes the plant is called a model of the plant. As the model represents the physical plant in every important way, this model is often called a digital twin of the plant.

There are two basic ways to obtain a model. We might develop it piece-by-piece by putting together simpler models of pumps, compressors and such items and adding these into a larger model. This approach is called the first-principles approach. It takes a long time and consumes significant effort by expert engineers both to construct it initially and also to keep it up-to-date over the lifetime of the plant.

The second way to get a model is to start with the data contained in the process control system and to empirically develop the model from this data. This method can be done automatically by a computer without involving much human expertise. It is thus quick to develop initially and is capable of keeping itself up-to-date. This approach is called machine learning.

4.1. Process Optimization

The purpose of machine learning is to develop a mathematical representation of the plant given the measurement data contained in the process control system. This representation necessarily needs to take into account the all-important factor of time as the plant has complex cause-and-effect relationships that must be represented in any model. These take place over multiple time scales. That is to say that the time from cause to effect is sometimes seconds, sometimes hours and sometimes days. Modeling effects at multiple scales is a complicating feature of the data.

The model should have the form that we can compute the state of the plant at a future time based on the state of the plant in the past and present. This sort of model can then be run cyclically so that we can compute the future state at any time in the future.

The state of the plant is the full collection of all tags that are important to the running of the plant. Typically there are several thousand tags in and around a plant that are important. These tags fall into three categories. First, we have the boundary conditions. These are tags over which the operators have no control. Examples include the weather and the quality of the raw materials. Second, we have the set-points. These are tags that are directly set by the operators in the control system and represent the ability to run the plant. Third, we have the monitors. These are all the other measurements that can be affected by operators (as they are not boundary conditions) but not directly (as they are not set-points) and so adjust themselves by virtue of the interconnected system that is the plant as the boundary conditions or set-points change over time. A typical example is any vibration measurement. This data is used by machine learning to obtain a model of the plant's process.

Having gotten the model, we want to use it to optimize the plant's performance. Here we need to agree on a precise definition of performance. It could be any numerical concept. Sometimes it is a physical quantity such as pollution (e.g. NOX, SOX) released, or an engineering quantity like overall efficiency of the whole plant, or a business quantity like profitability. We can compute this performance measure from the state of the plant at any point in time.

So now we have a well-defined optimization task: Find the values for the set-points such that the performance measure is a maximum taking into account that the boundary conditions are what they are. In addition to the natural boundary conditions (e.g. we cannot change weather) there could be other boundary conditions arising from safety protocols or other process limitations.

This is a complex, highly non-linear, multi-dimensional and constrained optimization problem that we solve via a method called simulated annealing. The nature of the task requires a so called heuristic optimization method as it is too complex for an exact solution. Simulated annealing has some unique features. It converges to the global optimum and can provide a sensible answer even if the time is limited.

The procedure in real-life practice is that we measure the state of the plant every so often (e.g. once per minute) by pulling the points from the OPC server and then update the model, find the optimal point, and report the actions to be performed on the set-points. This can be reported open-loop to the operators who then implement the action manually or closed-loop directly to the control system. As soon as something changes, e.g. the weather, the necessary corrective action is computed and reported. In this way, the plant is operated at the optimal point at all times.

4.2. Correction and Validation Rules

When the machine learning algorithm learns the model, it retrieves the historical data from the database point-by-point. Each point is first corrected and then validated. Only valid points are seen by the learning method and invalid points are ignored.

Think of a valve and the tag that measures how open that valve is. A fully open valve records 100% openness and a fully closed valve records 0% openness. As a result, the minimum and maximum values for this tag are 0 and 100 respectively. However, we find in practice that due to a variety of reasons we do get numbers stored in the database that are a little below 0 or a little above 100. These are not really measurement errors or faulty points. We should not throw this data away but correct this data to what we know it to be. A measurement below 0 is actually 0 and a measurement above 100 is actually 100. We know this by the nature of the thing being measured and so we use a correction rule. Correction rules are rules that change the numerical value of a tag to a fixed value, if the value recorded is above or below some threshold. By default, there are no corrections applied to the data. If corrections are necessary, you must specify them manually.

The machine learning algorithm should only learn operational conditions that are reasonable in the sense that it would be ok if the model were to decide to bring the plant to that condition in the future. Any exceptional conditions that the plant was actually in at some point but should not really get into again, ought to be precluded from learning. Also any condition in which the plant is offline, not producing, in maintenance or any other non-normal condition ought to be marked invalid. A validity rule requires that a certain tag be within specified limit values. By default, every tag must have a value between its minimum and maximum for the point to be valid.

In addition to the default validation rules, we may manually add others. These additional rules do not have to always apply and so we can add a specification that this rule only applies when some tag is above or below a certain value. This applicability feature allows you to enter more complex rules depending on conditions. For example you could formulate the requirement that the process have a high temperature at full-load and a low temperature at half-load.

4.3. Goal Function

The purpose of process optimization is to tell you what set-points to modify in order for your plant to reach its best operating point. In order for this to be possible, you must define what you mean by best. Any numerical quantity will do, e.g. efficiency, yield, profit and so on.

APO will maximize the goal function and so it is important how you formulate your goal. If you want APO to minimize some quantity, e.g. cost, then all you need to do is to put a minus sign in front. Then maximization will turn into minimization.

All pieces of information needed to evaluate the goal function must be available in the database. Let's take the example of the revenue due to the sale of electricity. On the one hand, we need a tag that measures the amount of electricity produced. That is usually part of the process model anyway. We also need, however, the financial value of one unit of electricity sold. This is generally not part of the process model. In order to include it as part of the goal function, you must include this tag in the database as well. Please note that financial prices are generally tags of their own as prices do tend to change over time. That is to say, prices are generally not static numbers.

The goal function of APO is the sum of as many terms as you like with each term having three elements that are multiplied together:

- The factor is a number that is intended to convert any units that might need converting or to include any static multiplier needed for chemical reasons. It also offers the possibility to include a minus sign so that this term is subtracted instead of added to the goal function.

- The first tag is the main element of this term.

- The second tag is optional. Its main use is for a goal function that computes profit and so we need to multiply volume by price in every term.

The input form allows you to specify a time period over which to evaluate the goal function's terms and add them up to their total. This is just for checking and will not influence the model in any way. Please use this feature in order to check the plausibility of the values. In practice, we often find that tags are recorded in different units than expected (for example tons instead of kg) and this causes rather large deviations in numerical values. In addition, sometimes a corrective factor is needed for the molar mass of a substance.

Each tag used in the goal function should ideally be semi-controllable. If a tag is controllable, then the optimizer can simply set it to its maximum value. If a tag is uncontrollable, there is nothing that can be done about that aspect of the goal anyway. This is a guideline and not a strict requirement however. If it is necessary, for the correct computation of profits, for example, to include some uncontrollable tags, then that is necessary.

The goal function is the centerpiece of APO that guides every decision. This must be specified with great care. If this function corresponds to your true business goals, then APO will get you there.

4.4. Train the Model

Training the model is an automatic process. You can trigger the training of the model either via the APO Wizard or via the APO Menu by selecting the Model option.

The form will ask for you to select the method you would like to be applied. Both choices will delete the current model and create a new one from the historical data. Method Model only will just do that and nothing more. It will therefore keep all the suggestions already made and all the manually entered reactions from the operators. This option is the right one for you if you have had APO in active use for some time and you merely want to update the model but retain the suggestions made in the past. Method full recomputation will also delete all suggestions made along with any manual reactions to suggestions. It will then recreate them all after the model is finished. This is the right option to choose if you are deploying APO, using it for the first time or in the process of fine-tuning the model.

The time limit can be used to speed up the recomputation of all historical suggestions by only computing them from the begining of known history to the specified point in time. Generally, we do not recommend using this option and recommend modeling the entire dataset.

Please note that training can take a substantial period of time. The amount of time is directly and linearly proportional to the number of data points in the database. The process is dominated by the read/write speed of database operations and thus the speed of the hard drive. Please be patient.

At the end of training, the model is saved in the database. If you have selected full recomputation, you will be able to look at the newly computed suggestions and assess model quality. We strongly recommend that you do so.

4.5. Assess Model Quality

There are three steps to assessing the quality of a trained APO model:

- Checking plausibility

- Reading the model report

- Fine-tuning the model

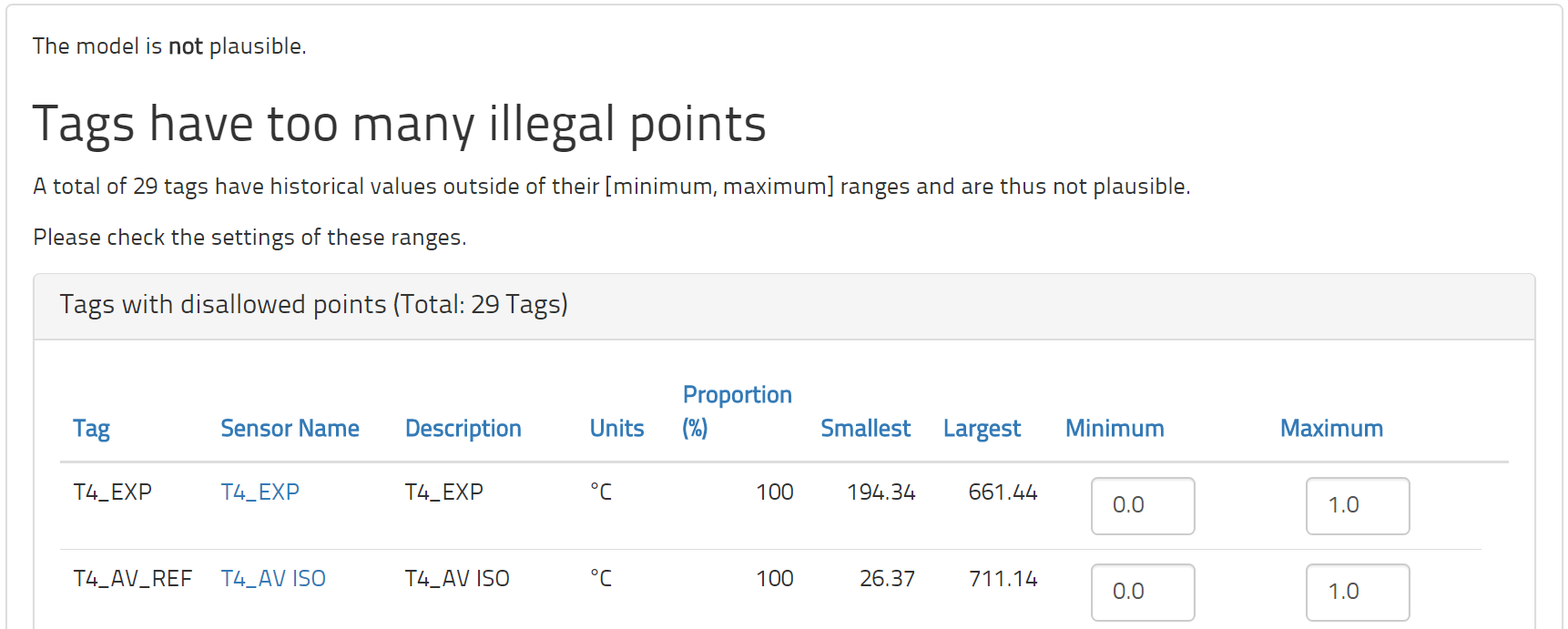

The model plausibility looks at the historical data in relation to the specifications made for each tag and tries to assess if these specifications make sense. The first thing checked is the measurement range of each tag. From the historical data, it is computed how many points lie inside and outside of the interval from minimum to maximum. This interval is intended to mark any and all normal and reasonable values. Therefore, only a very small minority of historical observations should lie outside this interval. However, we frequently find that some tags have a great many points outside this interval.

The minimum and maximum values are important because any point outside this allowed range is excluded from modeling. Generally, these values need adjustment before enough valid points are available to train a sensible process model. In the plausibility form, you will see not only the percentage of historical points that lie outside this interval but also the smallest and largest values ever measured for this tag. This information should help to guide you in making the appropriate corrections.

The second check concerns the measurement uncertainties of the uncontrollable tags. The modeler clusters the available historical data on the basis of the measurement values and uncertainties of the uncontrollable tags. For each such cluster, a separate optimization model is built. This is done in order to avoid suggesting a operational condition that cannot be reached because one or more of the uncontrollable tags are different. As they are uncontrollable, they mark boundary conditions to the process. The more tags are marked as uncontrollable and the smaller their uncertainties are, the more restrictions are put on the model. A more restrictive model will be able to optimize less.

In this check, please examine the selection of uncontrollabel tags. Perhaps some of them can be removed from the model if they do not really represent a boundary condition. Please also have a look at the uncertainty of these tags. The plausibility form will tell you how many clusters are formed on the basis of this tag. If in doubt, please start with larger uncertainty values to obtain a reasonable model. This can always be lowered at a later time to make the model more restrictive.

The third plausiblity check concerns the validity of historical data. Only valid points are used for training. A point is valid if all controllable tags are within their minimum to maximum ranges and if all validity rules are satisfied. You may also have defined a number of custom conditions that must be met. A common such custom condition is to require the plant to achieve a steady-state for validity. This effectively excludes all transient states from learning. If we have very few valid points in the history, then there may be too little data to learn from. As a plant's operations should generally be normal, having few valid points indicates that some settings need correcting.

After these three checks have been passed, the machine learning should be able to train a reasonable model for your process. Please train the model and have a look at the model report next.

4.6. Understand the Model Report

After you have trained the model, you can have APO generate a model report. This is either the last step in the APO wizard or you can get to it via the APO menu choosing the report option. The report generator asks for a time period and a granularity of the report. By default, the time period is set to the entire time period for which data is in the database. The report will be compiled for that time period and statistics will be collected with the frequency specified by the granularity.

The report itself contains an explanation of its data tables and diagrams. The report is mainly concerned with analyzing the optimization potential provided by APO. Every suggestion provides an opportunity to improve the plant's performance. If that suggestion is implemented, then that opportunity is realized as a actual gain. These opportunities and realized gains are summarized in the report. Please check that the magnitude of improvement is realistic. If the improvement is very large, the model may not know about all the restrictions or boundary conditions that the process has. If the improvement is very small, perhaps the restrictions on the model are tighter than they are in reality. A more detailed analysis of the suggestions themselves follows in the process of fine-tuning but an assessment of the rough numerical size of improvement should be done now using the report.

The report will also contain a list of the most common suggestions made. A single suggestion may contain several lines of changes to be made. Each of these changes is averaged out here and sorted according to how often they appear. You will see how often tags are changed and by what average amount they are changed. This average is supplied with a standard deviation so that you can see how much variation about this average takes place. Tags that appear in nearly every suggestion probably have an uncertainty that is too small and thus make nearly every value sub-optimal. Tags that appear in hardly any suggestion may not have a great influence on the process and perhaps should be ignored. On the other hand, they may have an uncertainty that is too great as to hide the true influence they may have. If the changes are often very large, perhaps there are limiting factors that must be imposed on the model.

4.7. Fine-Tune Your Model

After you have built a model and it is essentially sound on the basis of the tag settings, plausibility check and the model report, it is time to perform model fine-tuning. This is a process that may take some time and involves looking at many individual suggestions but will eventually make the model ready to be used in practical work and will also provide the confidence to use the model.

In the APO menu, please choose the option of fine tuning. To first begin fine tuning for a plant, please click on the button Initialize. This will select 100 randomly chosen historical suggestions for your review. These will then be listed in the fine tuning overview. You can restrict the list by using the form and clicking the button Filter. Filtering may be done by either the time of the suggestion or whether this suggestion is marked ok or not ok. Initially, of course, all suggestions are marked not ok.

Please now look through each suggestion carefully. Note that this suggestion was made a particular time in the past in response to a very particular state of the plant and its environment. In your assessment of the suggestion, please recall this situation. The questions to ask with each suggestion are

- Could all the listed changes have been implemented at all?

- Are there any reasons for not implementing any of the changes?

- What settings in the model need to be changed in order to prevent unrealistic changes from being suggested?

A suggestion is fairly self-explanatory. Please note that each suggestion only suggests to move the plant into a condition that it has already experienced at least once in the known history. This previous experience occured at a time that is labeled "Prior at" in the table. If you click on this timestamp, a comparison page will appear giving you the details of the current and this historical point so that you may compare them. This is useful for understanding what the model sees as a comparable condition and thus perhaps making modifications to the model or simply understanding where the suggestion comes from.

When you have checked through all the suggestions and collected a number of alterations to be made to the model, please actually make these alterations and retrain the model using the option of full recomputation. This will recompute all suggestions. Then go back to the fine tuning overview and now click on the button Update. This will now get the new suggestion for all the specially selected fine tuning suggestions you have already looked at.

Look though all the suggestions a second time. The ones marked ok, should still be ok. The ones marked not ok, could now have improved. If they are ok now, please mark them ok. This process should repeat until all suggestions in the fine-tuning overview are marked ok. At this point the model has been adjusted for a significant and representative sample of historical suggestions to be practical and good.

You can review your work done by clicking on Overview that will supply you with a quick count of the number of suggestions marked ok during every iteration of this process. You can also click on Report to get a full (and lengthy) report on the evolution of every suggestion and all your comments.

At the end of fine tuning, the model is ready to be used in real plant operations. We recommend using the model for a few days in probationary capacity just in case some feature only becomes apparent in current operations.

5. Intelligent Health Monitor (IHM)

The Intelligent Health Monitor (IHM) makes condition monitoring smart by bringing it into the age of machine learning. Normal condition monitoring suffers from giving false alarms, not alarming all bad conditions, and requiring significant human expertise to set up and maintain. IHM solves all three of these problems by providing effective, efficient and accurate methods to detect equipment health. This is done by changing the definition of health from human specified limit values for each tag separately to a holistic form based on the past performance of the equipment.

Unhealthy states are detected because the combination of various measurements is taken into account by the holistic modeling approach. For example, temperature, pressure and rotation rate give valuable information about whether the vibration is acceptable or not. Due to that approach false alarms are also avoided. As the method is based on historical data, maintenance engineers no longer have to specify, maintain and document alarm limits.

As a result of the increased reliability of health detection, the availability of the equipment and thus the plant increases. With the increased availability, the effective production capacity of the plant improves. Due to being able to proactively maintain the equipment, maintenance can increasingly be planned and thus becomes less expensive. As the equipment no longer fails but can be proactively repaired, collateral damage is avoided and significantly lowers maintenance expenditure.

5.1. Condition Monitoring

In operating technical equipment in an industrial plant, we are concerned whether this equipment is in good working order at any one time. Activities concerned with determining this health status are grouped under the term condition monitoring. This is generally approached by installing sensors on the equipment measuring quantities like vibration, temperature, pressure, flow rate and so on. These measurements are transmitted to the control system and usually stored in a data historian.

In between the control system and the historian, the data is analyzed to determine the health status of the equipment. For this purpose, one usually defines an upper and lower alarm limit for each tag of importance. If the measurement ever goes above the upper limit or below the lower limit, an alarm is released.

When an alarm is released, a maintenance engineer looks at the data and determines what, if anything, must be done. If the engineer determines that nothing must be done, the alarm is a false alarm. If the equipment is not in good working order but this status is not alarmed, this is called a missing alarm. Both are problematic. The false alarm is unwanted because it wastes time and resources. The missing alarm is dangerous because it will most likely result in an unplanned outage that may cause collateral damage and production loss.

The reason for both false and missing alarms is usually due to the simplistic nature of the analysis. The static nature of the upper and lower limit is not able to capture the complexity of diverse operating conditions of industrial equipment. In addition, the fact that each measurement is analyzed individually is a major drawback. All measurements on a single piece of equipment are obviously connected. By ignoring this natural connection, the analysis is throwing away a major source of information.

One may try to overcome the first defect by what is known as event framing. This is where one defines certain operating conditions of the equipment as belonging to one group and then supplies an upper and lower limit only for that group. For example, one may divide the data from a turbine into full load, half load and idle conditions by defining a condition on the rotation rate. While this is a certain improvement, it also increases the amount of manual work that must be done to setup and maintain the analysis. With plants having tens of thousands of measurements, this quickly becomes overwhelming and error prone.

We conclude that normal condition monitoring defines the health of a piece of equipment measurement-by-measurement through specifying limiting values based on human engineering expertise. This definition of health is limited in its effectiveness and requires significant human effort both initially as well as continually.

5.2. Concept of Dynamic Limits

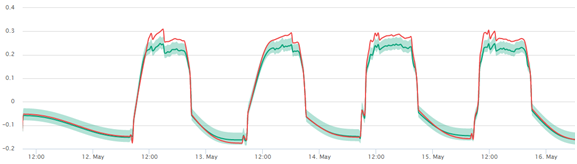

IHM constructs a machine learning model of one measurement time-series as a function of several other such time-series coming from one industrial plant or piece of equipment. If the underlying physics and chemistry of the industrial process remains the same over the long-term, then the modeling process will be able to deliver an accurate, precise and reliable model for the measurement concerned.

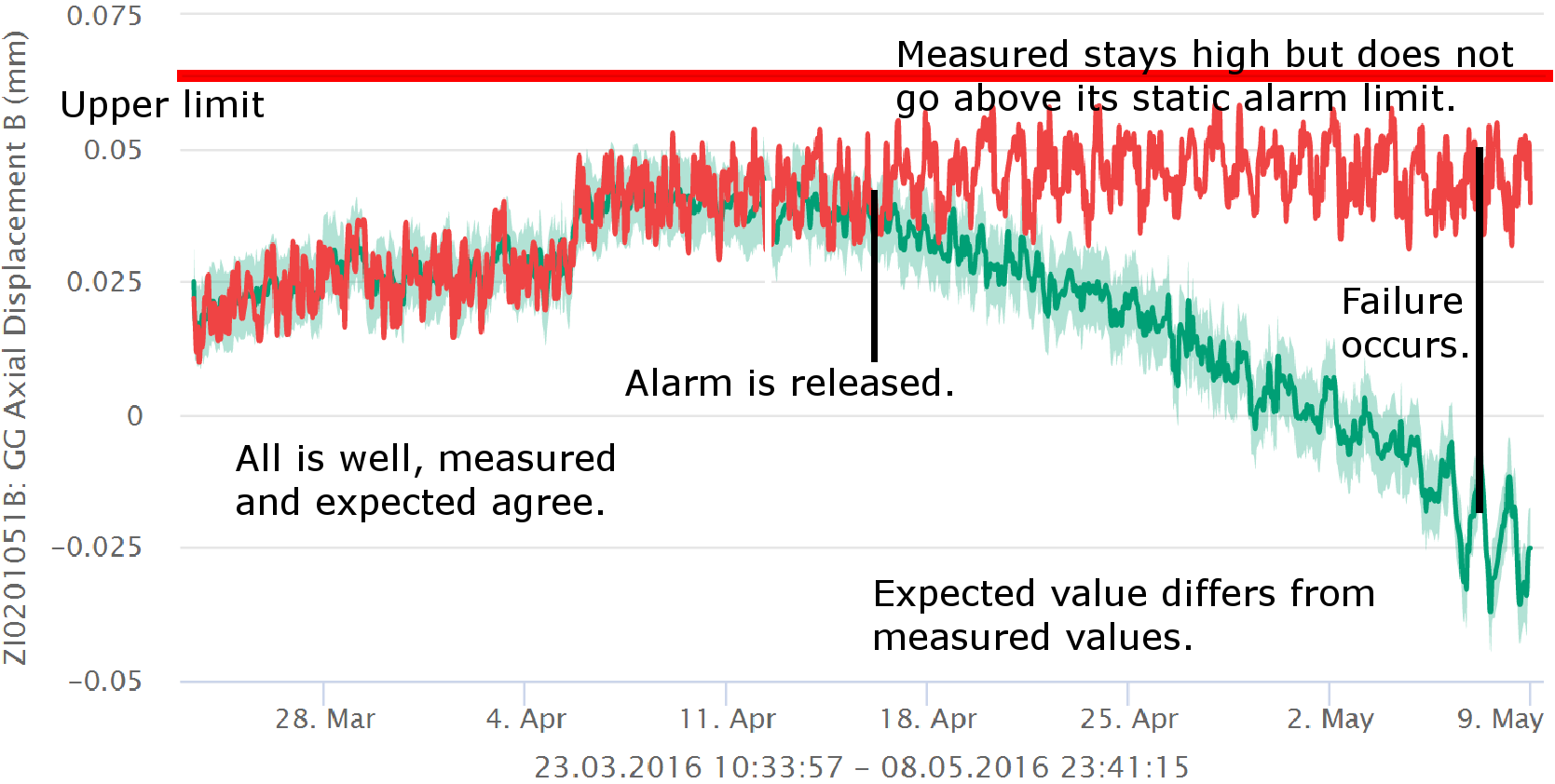

This model is constructed over a time period where the equipment is known to have been healthy. Therefore the model becomes the definition of health for the tag being modeled instead of the static limits in normal condition monitoring.

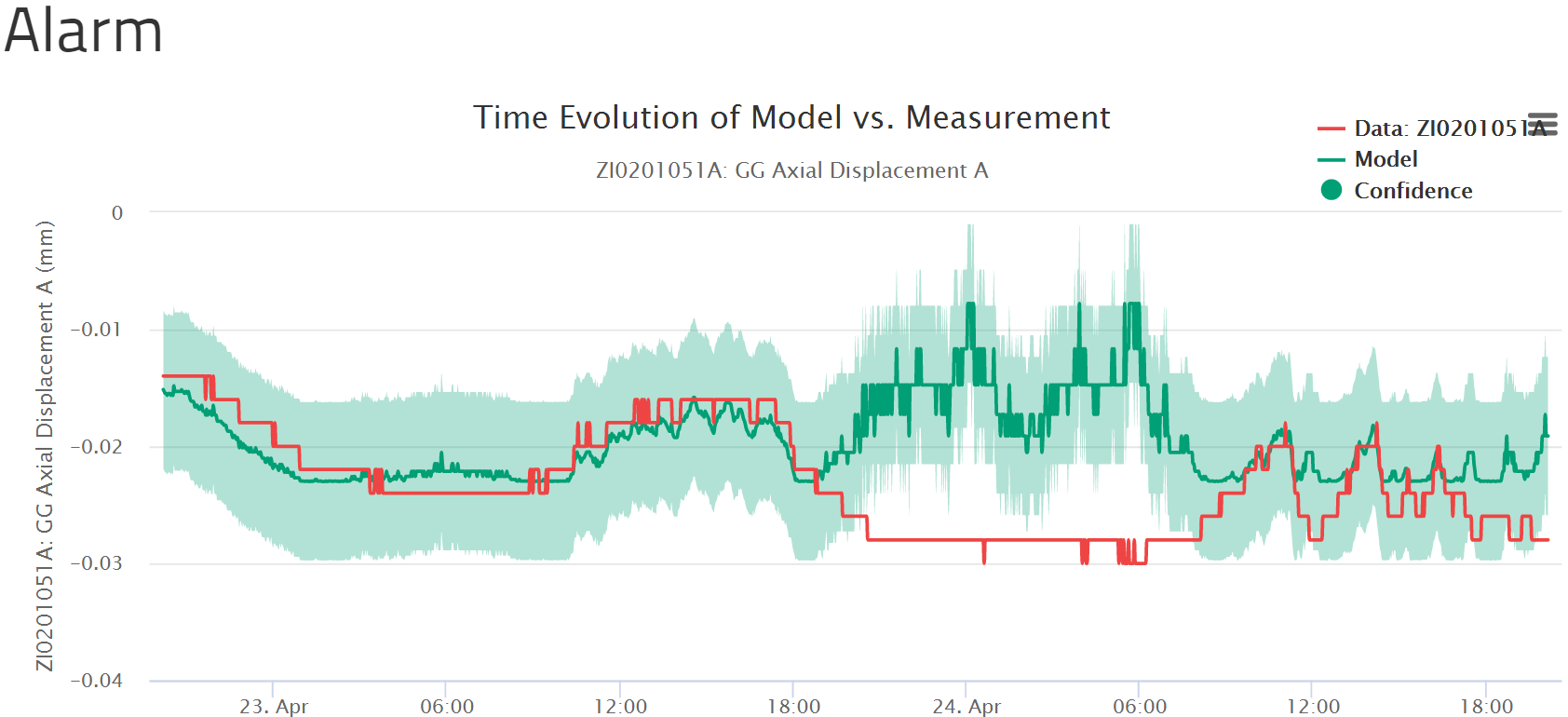

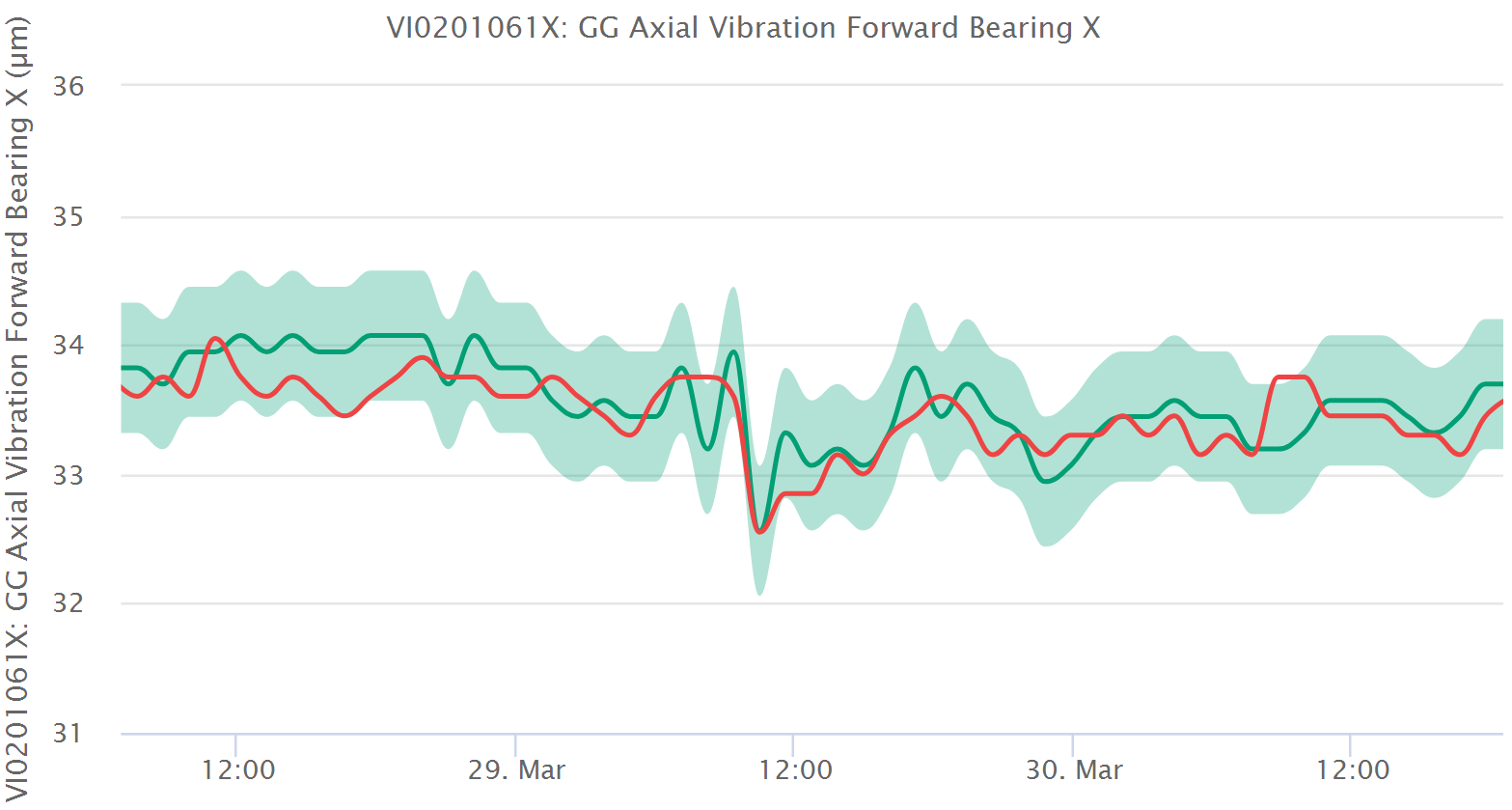

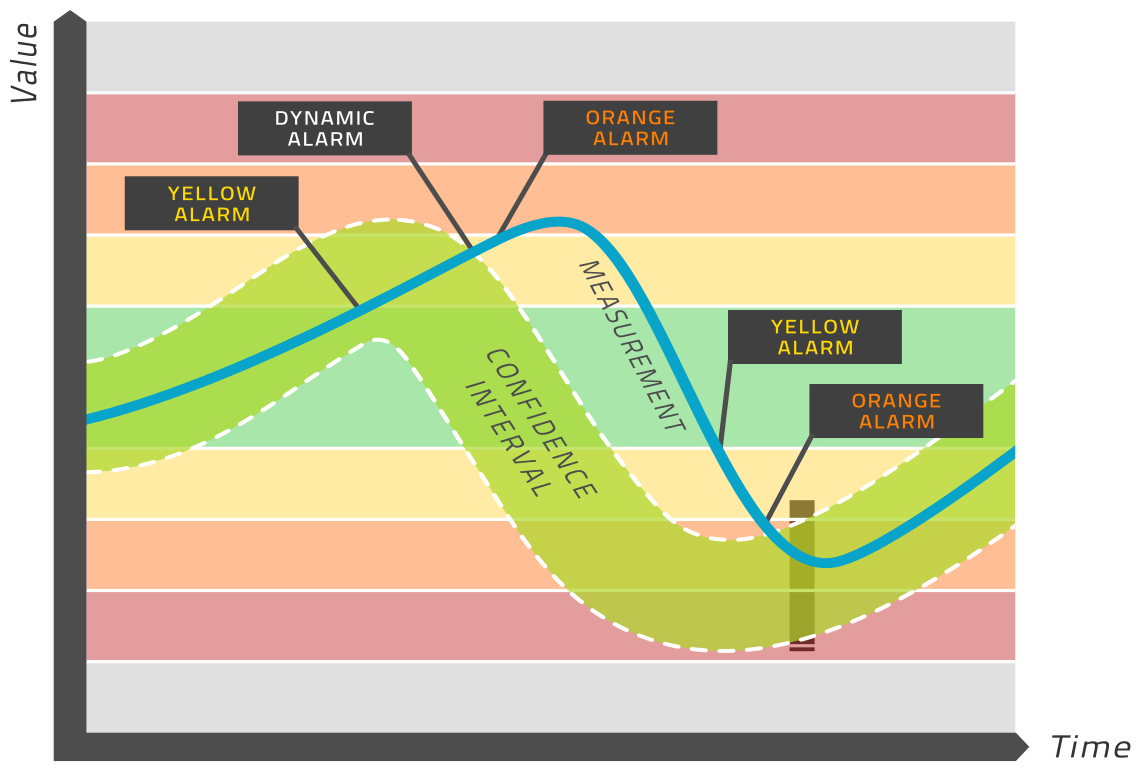

The model is evaluated in real-time and yields an expected value for each relevant tag. A confidence interval is computed around this expected value, which amounts to a range of values (light green band in the above picture) that are considered healthy. If the measured value lies within this band, then the equipment is healthy. If the measured value is outside this band, then an alarm is released. This is in sharp contrast to normal condition monitoring, where alarms are released based on limiting values that do not change over time, such as the normal yellow, orange, red regions that one can define in many condition monitoring tools. This is why we refer to this approach as dynamic limits. Please see the image above for a visual depiction of this process.

If you are interested in the model itself and how it is obtained, please see below in the section on mathematical background.

5.3. Concepts of IHM

Machine learning methods take empirical data that has been measured on this particular machine in the past when it was known to be healthy. From these data, the machine learning methods automatically and without human effort construct a mathematical representation of the relationships of all the parameters around the machine.

It can be proven mathematically that a neural network is capable of representing a complex data set with great accuracy as long as the network is large enough and the data consistently obeys the same laws. As the machine obeys the laws of nature, this assumption is easily true. We therefore use a neural network as the template for modeling each measurement on the machine in terms of the others. The machine learning algorithm finds the values for the model parameters such that the neural networks represent the data very accurately.

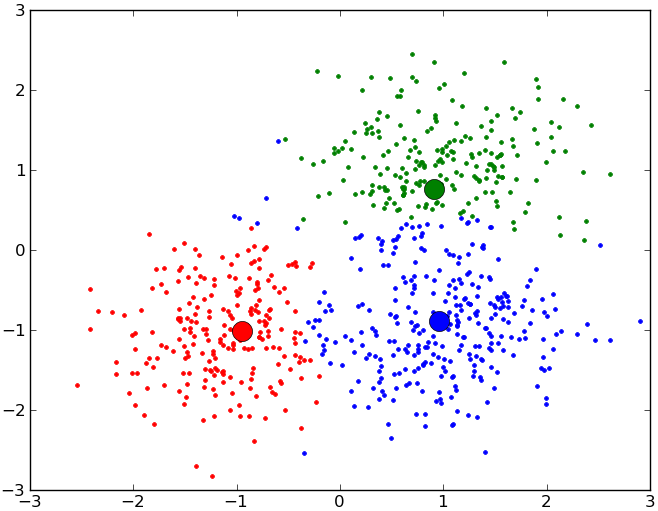

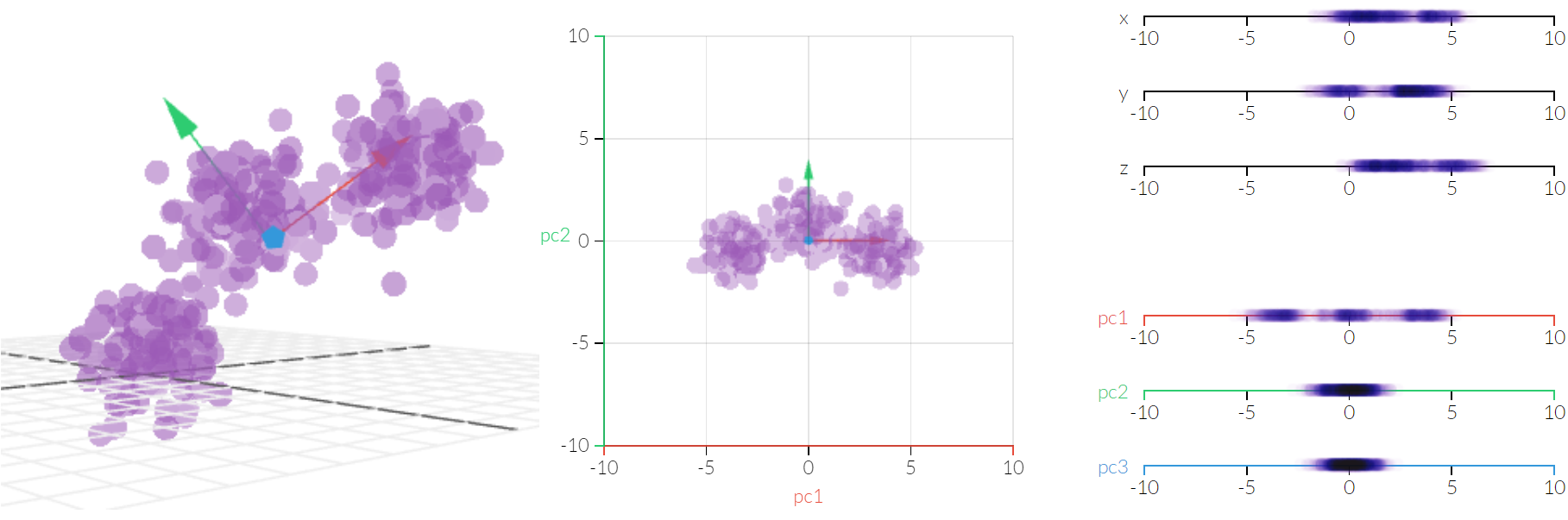

The selection of which measurements are important to take into consideration when modeling a particular measurement can also be done automatically. We use a combination of correlation modeling and principal component analysis to do this.

The result is that each tag on the machine gets a formula that can compute the expected value for this tag. As the formula was trained on data known to be healthy, this formula is the definition of health for this machine. Unhealthy conditions are then considered deviations from health.

It is important to model health and look for deviations from it because health is the normal condition and much data is available for normal healthy behavior. Rather little data is available for known unhealthy behavior and this small amount is very diverse because of a host of different failure modes. Failure modes differ for each make and model of a machine making a full characterization of possible faults very complex. Modeling poor health is not a problem of data analysis but rather data availability. As such, this problem is fundamental and cannot be tackled in a practical and comprehensive way.

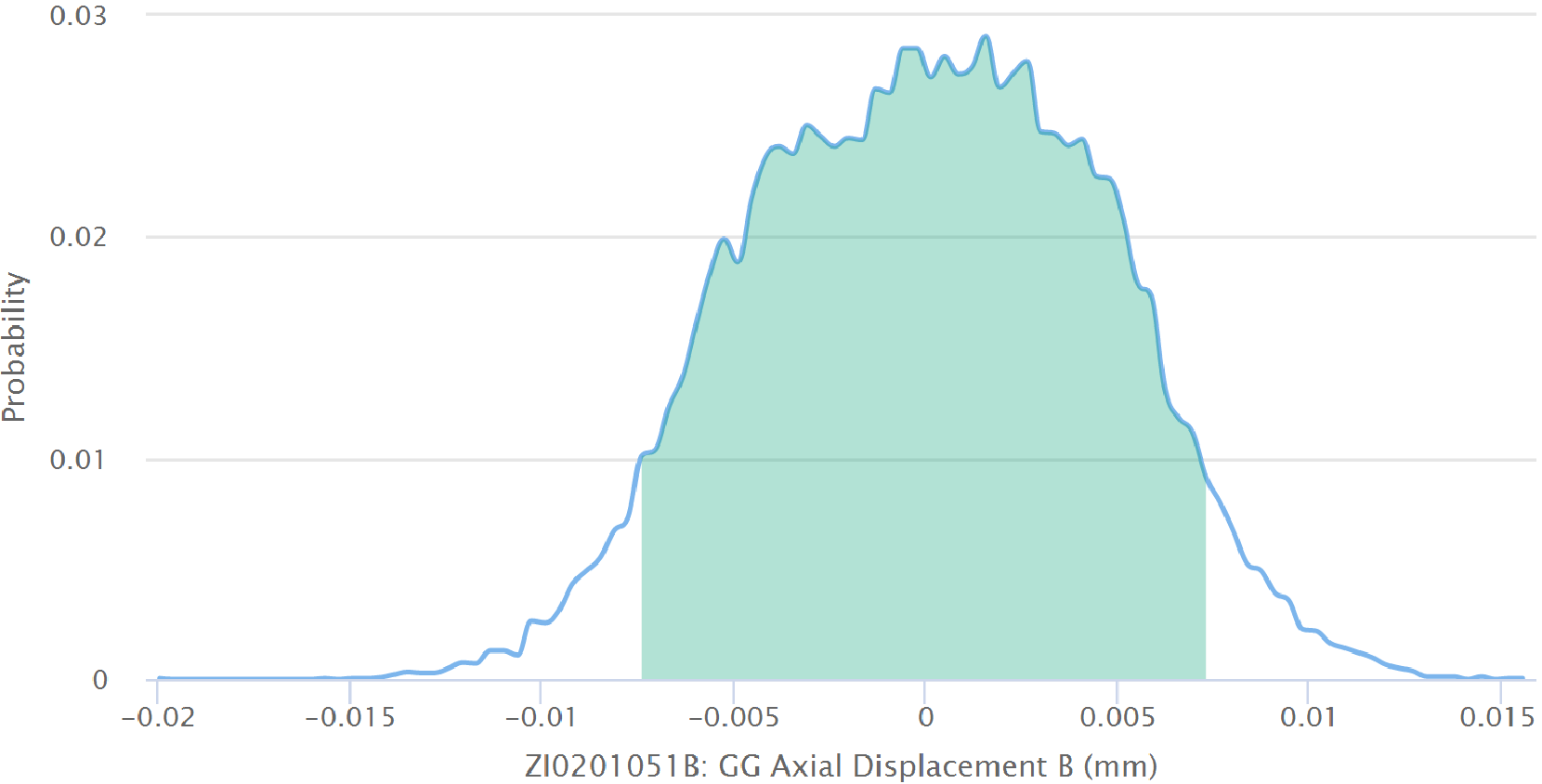

At any one time, we can compare the expected healthy value to the sensor value. As the expected value is computed from a model, we know the probability distribution of deviations, i.e. how likely is it that the measurement will be away from the expectation by a certain amount. So we may compute the probability of health from this distribution or conversely use this confidence interval in order to judge if the sensor value is too far away from health. If that is found to be the case, then an alarm is sent.

The alarm can be enriched by the information of how unhealthy the state is by providing the probability of poor health. Since the expected value has been computed from a (usually small) number of other machine parameters, it is generally possible to lay blame on some other measurement. This gives assistance to the human engineer receiving the alarm in the effort to diagnose the problem and design some action.

With normal condition monitoring, in practice, it is often found that when a machine transits from one stable state to another, lots of (false) alarms are released because a simple analysis approach cannot keep up with the quickly changing conditions. As a neural network can easily represent highly non-linear relationships, even a startup or load change of a machine will be modeled accurately without alarm if everything is as it should be.

5.4. Initial Putting into Service

After installing IHM on your system, you will find a menu on the interface page. One of the entries is called Wizards and among them is the IHM Setup wizard. If you click on this, you will be guided through a workflow to generate a new dataset with model and dynamic limits.

First, you generate a new plant. While this is called a plant, you may want to create a new plant for each major piece of equipment. The decision which data points belong into a single analysis framework, here referred to as a plant, is up to you and depends on how connected these parts are. The IHM software can handle multiple plants.

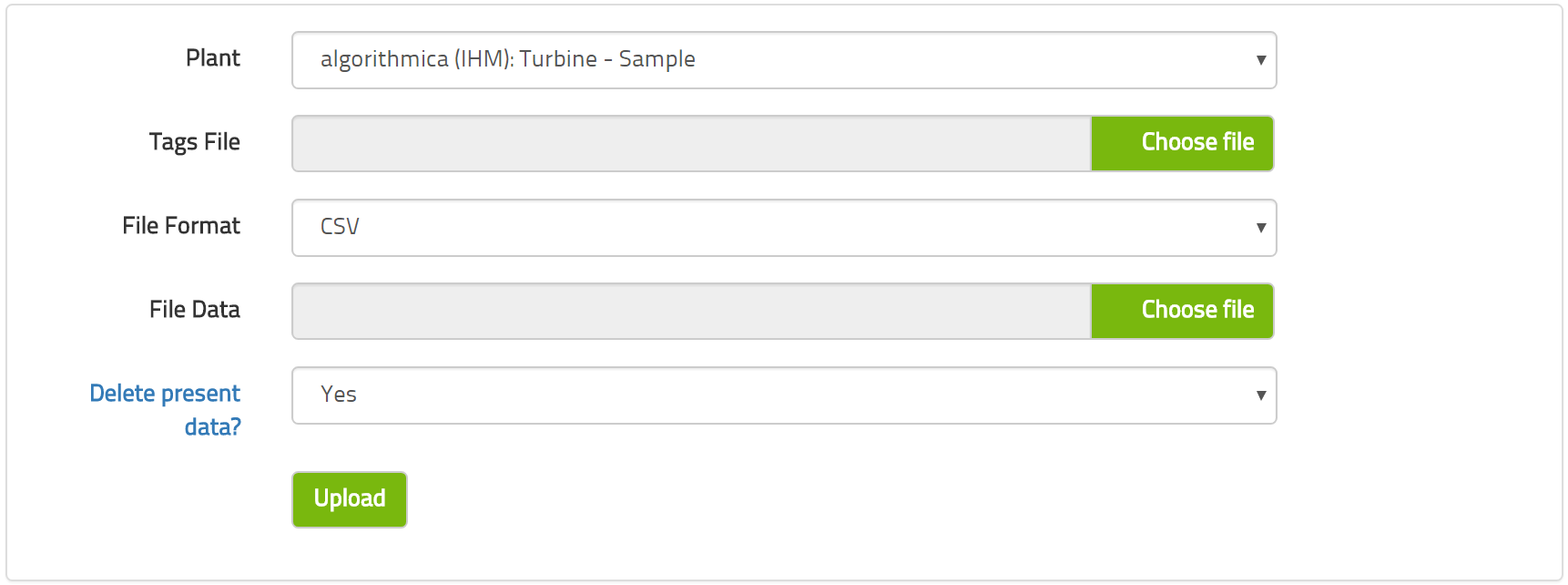

Second, you define the measurements and their metadata. This is explained in the wizard in detail. You may enter this information directly in the form on the page or you may prepare the information externally and put it into a file.

Third, you will upload the historical data that you have exported from your data historian. Here you will also upload the metadata if you have not manually typed it into the online form before.

Fourth, there will be a plausibility check for the metadata. If there are any implausibilities, the interface will explain what they are and how to fix them. This essentially detects any typos in the ranges of values and so on to make sure that everything makes numerical sense.

Fifth, you can enter the time periods that the equipment was known to be healthy and any exclude conditions. These two wizard entries will apply to all models for this dataset. You can, if you wish, customize these two pieces of information for each model individually. It is far more efficient to do it here globally. It makes sense that the times of health apply to the whole equipment and that exclude conditions also apply for the whole equipment. Thus, we recommend that you only customize these concepts for each model in exceptional circumstances.

On this last page, there is a button to model everything. If you click on this button, the computer will automatically select the independent variables, train and apply the model to the historical data for every single model requested in the metadata. Depending on the number of models requested and the amount of training data, this may take a substantial period of time. We recommend pressing this button at the end of a working day or even on a Friday afternoon so that the computer may work for many hours without disturbing you during any other items of work.

5.5. Creating or Customizing the Models

We recommend following the IHM setup wizard in order to initially work with a new dataset. It is recommended to have a look at each model to check if it is good or not. Instructions for goodness of fit can be found in the section on mathematical background.

When you enter the edit page for a particular model, you will find that you can modify the training times and add exclusion criteria. We recommend that you do not do this but rather edit this information globally for the entire dataset using the IHM wizard. Changing this information for an individual model should be done only in exceptional circumstances.

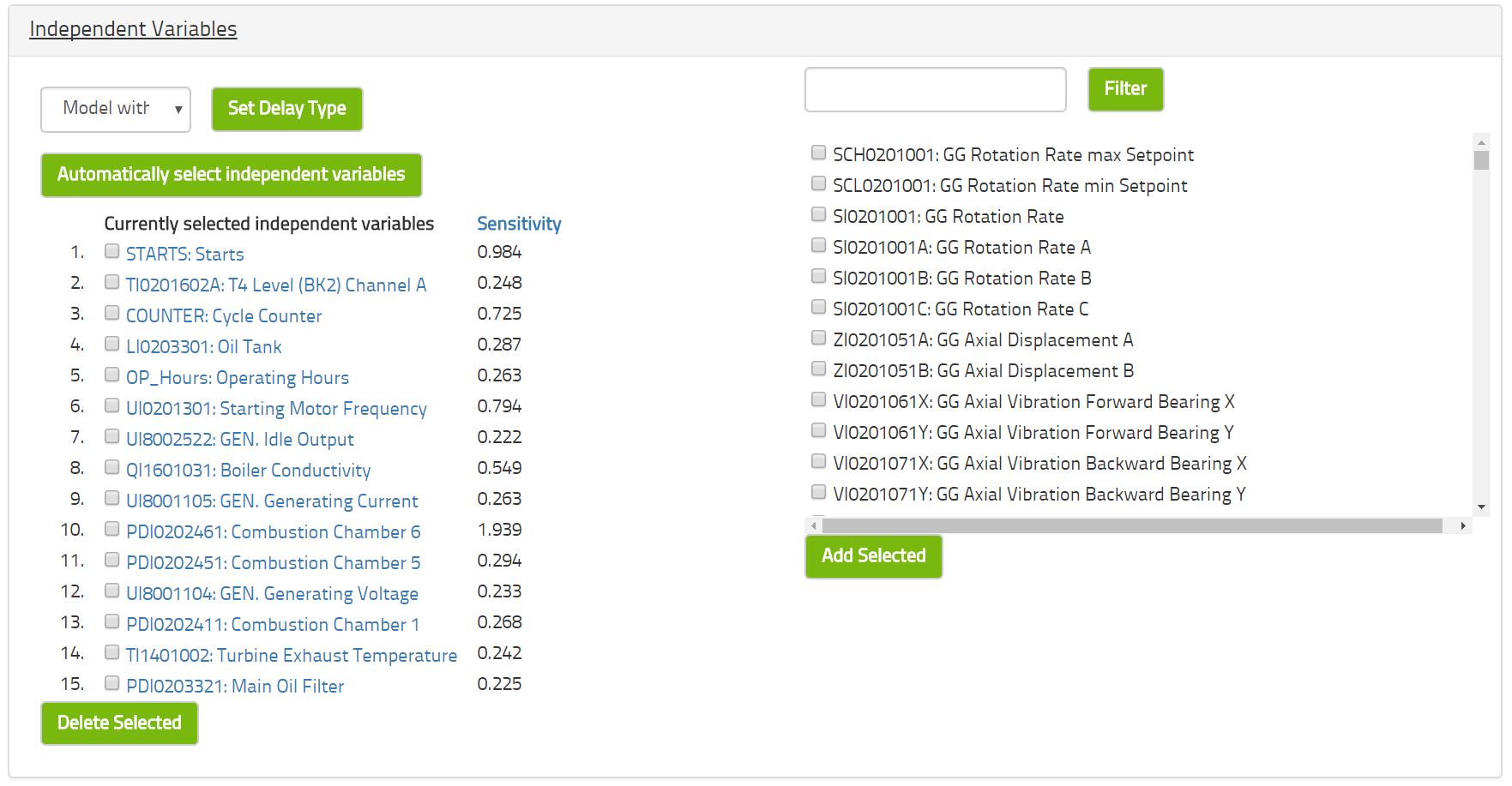

You may alter the selection of variables by either deleting or adding variables to the list of independent variables. You may also change more advanced settings such as the confidence level desired or the number of neurons in the neural network. The confidence level defaults to 0.9 and the number of neurons defaults to twice the number of independent variables. Please see the section on mathematical background for more details on these concepts.

Having made these changes, you will find three buttons at the bottom of the page. They will model, apply or do both (model and apply). Model means that the model will be retrained using the current settings and stored in the database. Apply means that the model will be executed on the historical data thus recomputing historical results and alarms. Generally, you will want to both model and apply.

If you only model, the historical results and alarms will be kept. This is interesting only if you have already been working with the system in an online capacity and need to retain the historical alarms for reporting purposes. If you only apply, then the model will be executed as it is. If the model has not changed, this will just reproduce the same results but will first delete the alarm history alongside and any manual alarm editing that you may have done.

5.6. Getting and Administrating the Alarms

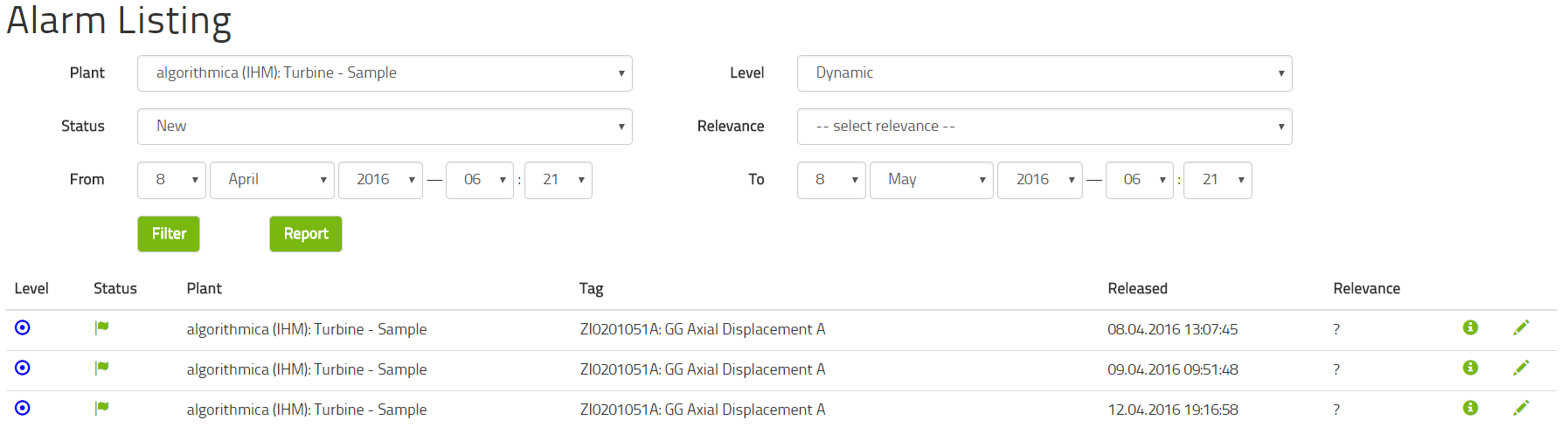

Having modeled and applied the model, the application will have computed any historical alarms. These can be looked at using the menu for IHM and Alarms. To select the right alarm you are interested in, you may use several filters and then obtain a list of alarms.

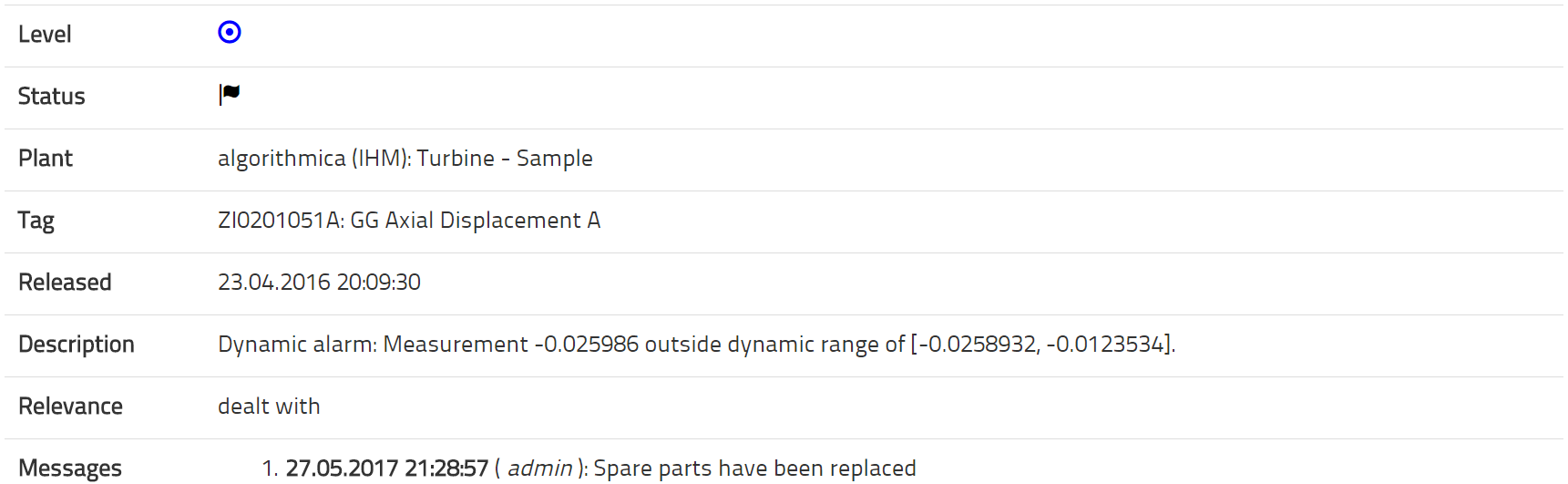

If you click on any one alarm, you will see a brief report about the alarm including a relevant chart of the alarm for 24 hours before and after the alarm.

If you click on edit, you may change the category of the alarm and add messages. This information can be retrieved by looking at this alarm or producing an alarm report that may be useful for periodic documentation.

6. Intelligent Soft Sensor (ISS)

Sometimes it is difficult or expensive to measure a quantity of interest by a sensor installed in the process. In that case, we might opt to measure it manually by a hand-held sensor, a portable devise or by taking a sample and having it investigated in the laboratory. This manual process becomes tedious and expensive in its own right after some time. Additionally, the value only becomes available when we perform this process and even then only with a delay.

A soft sensor is a piece of software intended to replace the manual measurement process by computation. The value is available in real-time without making any manual effort. This can be used to compute process efficiency, gas chromatography, pollutants or any other quantity hard to measure.

The soft sensor is a essentially a formula that computes the quantity of interest from other quantities that can be measured in the normal way. The formula itself is determined using machine learning from the data collected by hand. First, the computer determines which process values are necessary to compute the interesting one. Second, the data is collected and a model is trained. Then the model quality can be checked and the model can be improved, if necessary. Once the model is good, it can be deployed computationally in order to look just like any other sensor.

6.1. Creating a new Soft Sensor

In the ISS menu, you can access the list of all soft sensor present in your plant. The button at the bottom will lead you to a page for creating a new soft sensor. The tag is the tag that contains the manually collected data for the quantity of interest that you want to model. The new tag is the alphanumeric nomenclature for the new tag that will be the result of the formula about to be constructed. We recommend that this nomenclature be very similar to the nomenclature of the original tag but it must be a unique identifier for this plant. When you click on Create, not only will a new soft sensor object be generated but a new column will be added to the table of historical data for your plant. This new column is for the computed data from the new soft sensor.

You will be taken to the edit page for the soft sensor. First, you will need to define one or more training time periods that select the historical data on which the soft sensor is to be trained. From these time periods, any points will be excluded that match any of the exclude conditions that you can also define here. Both of these elements together will result in a list of data points that will be used for training.

Next, comes the selection of independent variables. These are the variables that go into the formula that will eventually compute the value of the soft sensor. If you know what tags influence the quantity of interest, you can manually select them and add them to the model. However, we do not recommend that you do so. There is a button to perform this selection automatically by virtue of data analysis. It is preferable to do any manual editing after the automatic selection process.

Having selected the variables that are going to go into the model, we may edit some more parameters of the model. The soft sensor can be given a name and you can write a comment text on it, if you would like. More importantly, you can edit the number of hidden neurons. The formula for the soft sensor will be a neural network. Hidden neurons are essentially the free parameters in this formula. The more hidden neurons one has, the more free parameters one has. Having more parameters usually allows the model to fit the training data better but only up to a point. There is a law of deminishing returns at work here and also there comes a point at which the number of parameters is so large that the training data can be memorized rather than learned. At this point, the model can reproduce past data with perfection but it cannot generalize to a new situation at all well. We recommend choosing a number of hidden neurons equal to approximately twice the number of independent variables.

After setting all this up, you are ready to construct the model. The button Model trains the model, the button Apply uses the model, computes the value of the soft sensor for the entire available history in the database and saves these values there, and the button Model and Apply does both of these things in one operation. We recommed to model and apply together. You can then go to the show page in order to examine how good the soft sensor is.

6.2. Assessing Model Quality

Navigate to the show page for your soft sensor. You will be taken there automatically after you have pushed the model and apply button on the edit page or you can select the show link on the list of soft sensors accessible through the ISS menu.

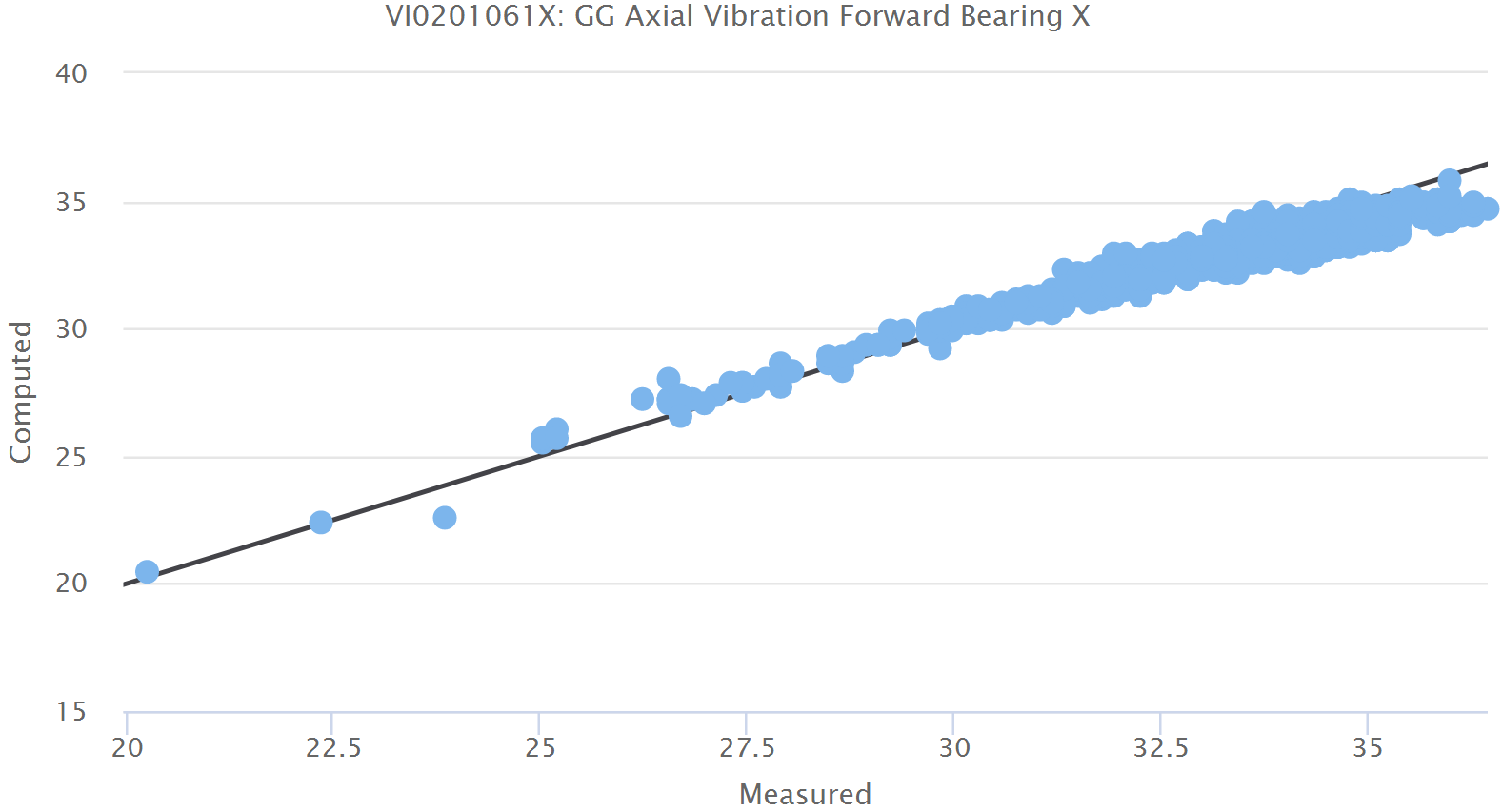

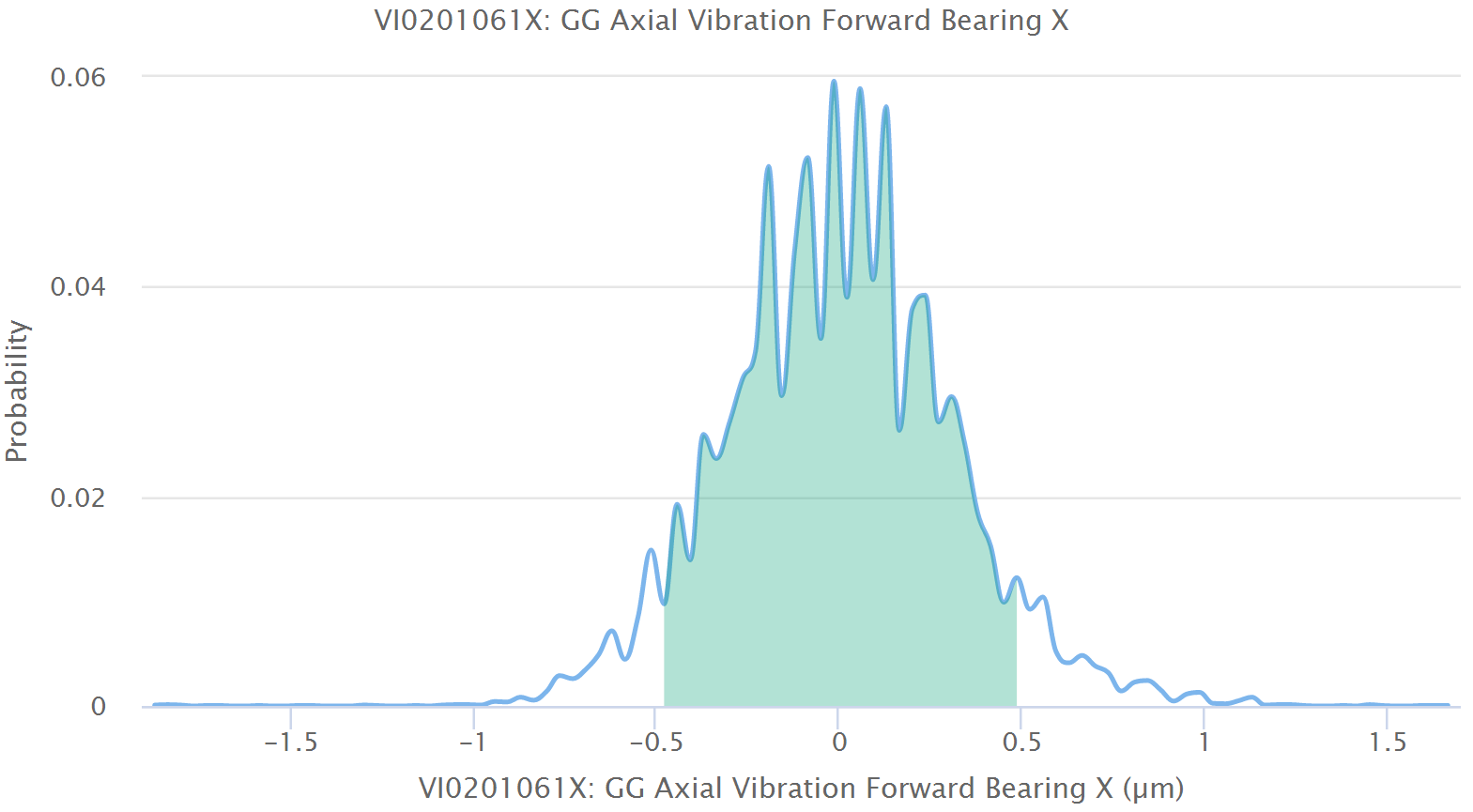

On the top of the page, you can select a time period for the time series display. This will select data for the original tag and the soft sensor tag over this time period and display it in two ways. The top diagram will be a simple time-series plot and the second diagram will plot the measured value against the computed value. Ideally the two tags will have equal values. Realistically, they will differ at least by some inherent random variation that is a result of the measurement process. On the plot of both tags versus each other, you will find a straight black line. This is the ideal line you would get if both values were always perfectly equal. Based on this line, you can judge the degree to which they agree.